Consolidate your cannibalised pages

Duplicate content and cannibalisation (where two or more pages compete with each other to rank for similar keywords) are mortal enemies of SEO performance.

Even the best-optimised sites have a little cannibalisation, but most of the sites we see are in a bad way. At enterprise level, it can easily run into millions of pages.

That’s an awful lot of wasted crawl bandwidth.

Put simply, sites with this problem will contain many pages that perform less well in search.

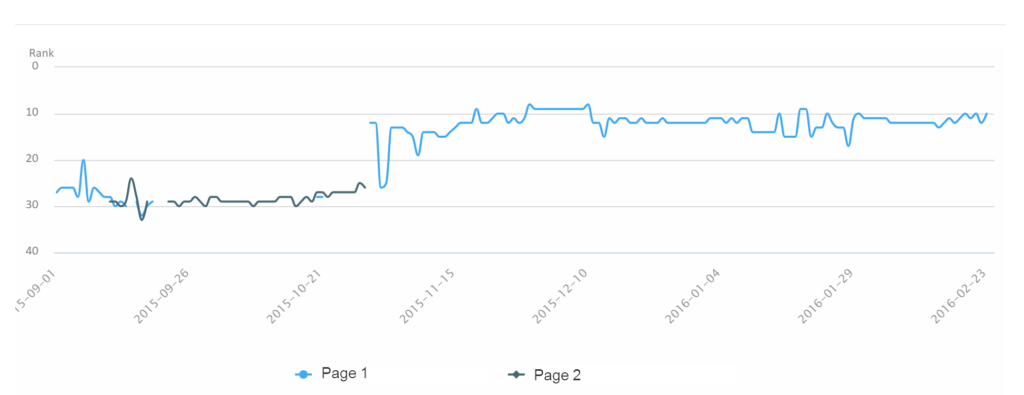

Look at the data below. In this case, we were tracking two pages that were targeting the same competitive search term. Both pages were stuck low on the third page of results for that term.

Redirecting “Page 2” to “Page 1” and consolidating the content into a fuller, richer page had enough impact to lift “Page 1” into a low top 10 position. No new links were built.

Image Source: Builtvisible’s Client Performance Platform

Detecting duplicate content and page cannibalisation requires careful, keyword-by-keyword analysis and a rank-tracking tool that can show multiple pages appearing for the same keyword. The potential gains are significant, though, so do consider hunting for cannibalised pages on your site.
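If your rank tracker can export rows of keyword, ranking URL and position, you can automate a first pass at spotting cannibalisation. The sketch below is a minimal example, assuming that export has already been loaded as a list of tuples for your own domain; the function name and sample URLs are hypothetical. It keeps each URL's best position per keyword and flags any keyword where two or more of your URLs rank.

```python
from collections import defaultdict


def find_cannibalised_keywords(rankings):
    """Flag keywords where two or more of your own URLs rank.

    rankings: iterable of (keyword, url, position) rows, e.g. loaded from a
    rank-tracking export (hypothetical format). Returns
    {keyword: [(best_position, url), ...]} sorted by position.
    """
    best = defaultdict(dict)  # keyword -> {url: best (lowest) position seen}
    for keyword, url, position in rankings:
        current = best[keyword].get(url)
        if current is None or position < current:
            best[keyword][url] = position
    return {
        keyword: sorted((pos, url) for url, pos in urls.items())
        for keyword, urls in best.items()
        if len(urls) > 1
    }


# Hypothetical sample data: two pages sitting on page 3 for the same term.
rows = [
    ("1tb ssd", "https://www.example.com/ssd-1tb", 24),
    ("1tb ssd", "https://www.example.com/blog/best-1tb-ssd", 27),
    ("1tb ssd", "https://www.example.com/ssd-1tb", 22),
    ("pet jellyfish", "https://www.example.com/jellyfish-tank", 8),
]
print(find_cannibalised_keywords(rows))
```

Each flagged keyword still needs a human review before you decide which page to keep and which to redirect.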

If your site hasn’t had the technical SEO attention it deserves, there’s a big gain to be made from analysing your log data and correctly implementing basic technical improvements, including rel="canonical", robots noindex, removal of unnecessary duplicate parameters, robots.txt wildcards and so on.
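One way to size the duplicate-parameter problem is to take the URLs Googlebot actually requested (from your access logs) and group them by a normalised form. This is a minimal sketch, assuming you have already filtered your logs down to a list of crawled URLs; the `CONTENT_PARAMS` allowlist is hypothetical and will differ for every site. The normalised URL is also a reasonable candidate value for each variant's rel="canonical".

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allowlist: parameters that genuinely change the page content.
# Anything else (tracking tags, sort order, session IDs) is treated as a duplicate.
CONTENT_PARAMS = {"colour", "size"}


def canonical_url(url, keep=CONTENT_PARAMS):
    """Drop non-content parameters and sort the rest so duplicates compare equal."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in keep)
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def duplicate_groups(crawled_urls):
    """Group crawled URLs by canonical form; keep only groups with duplicates."""
    groups = defaultdict(set)
    for url in crawled_urls:
        groups[canonical_url(url)].add(url)
    return {
        canon: sorted(variants)
        for canon, variants in groups.items()
        if len(variants) > 1
    }


# Hypothetical URLs, e.g. pulled from Googlebot requests in your access logs.
crawled = [
    "https://www.example.com/ssd-1tb?utm_source=newsletter",
    "https://www.example.com/ssd-1tb?sort=price",
    "https://www.example.com/ssd-1tb",
    "https://www.example.com/ssd-1tb?colour=red",
]
for canon, variants in duplicate_groups(crawled).items():
    print(f'<link rel="canonical" href="{canon}">', len(variants), "variants")
```

Large groups point at crawl budget being spent on duplicates, which is where canonicals, parameter clean-up or robots.txt rules are likely to pay off.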

Use the correct schema mark-up for rich snippets

Way, way back we learned that rich snippets can positively influence CTR (click-through rate) in the search results. Implementing schema.org markup on your product pages is therefore an absolute “must add” to your SEO development schedule.

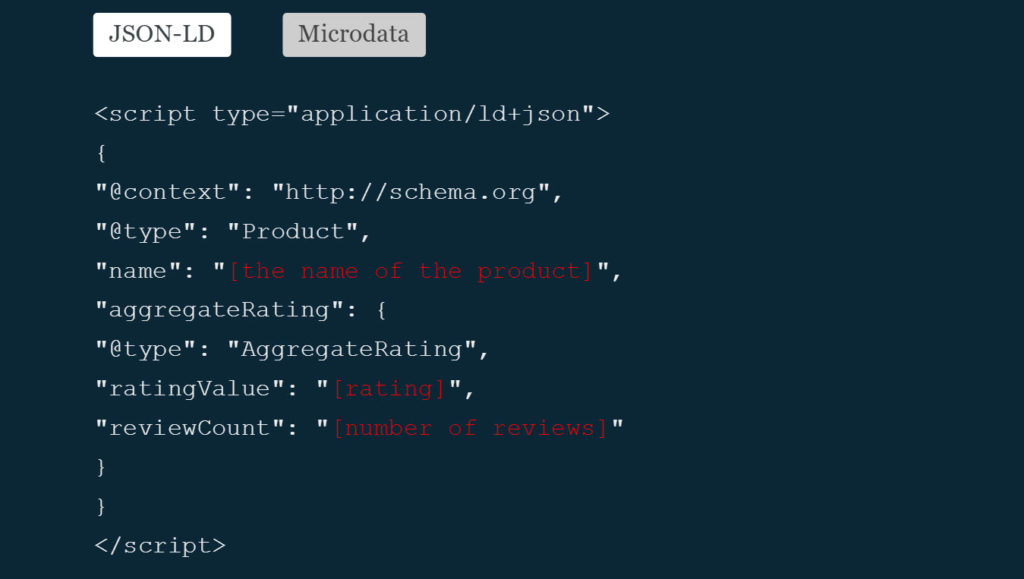

Example JSON-LD for reviews. Take a look at Builtvisible’s guide for more background and technical implementation.

As for whether to implement using Microdata or JSON-LD, examples of the latter are still rare. Aaron at SEOskeptic confirmed some time ago that, according to Google, a working JSON-LD implementation will generate a rich snippet for a product / review page.
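If you want to test JSON-LD on your own product pages, generating it from your catalogue data keeps the markup consistent with what is on the page. The sketch below is a minimal example using the schema.org `Product`, `Offer` and `AggregateRating` types; the product record and its field names are hypothetical, and you should still check the output with a structured data testing tool.

```python
import json


def product_json_ld(product):
    """Build a schema.org Product block (with offer and aggregate rating) as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "image": product["image"],
        "sku": product["sku"],
        "offers": {
            "@type": "Offer",
            "price": f"{product['price']:.2f}",
            "priceCurrency": product["currency"],
            "availability": (
                "https://schema.org/InStock"
                if product["in_stock"]
                else "https://schema.org/OutOfStock"
            ),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": product["rating"],
            "reviewCount": product["review_count"],
        },
    }
    # Escape "<" so a product name can never close the <script> tag early;
    # the escaped form is still valid JSON for the same character.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical product record, e.g. from your catalogue database.
print(product_json_ld({
    "name": "Mini Cookhouse Clock",
    "image": "https://www.example.com/products/huge/105322/mini-cookhouse-clock.jpg",
    "sku": "105322",
    "price": 24.0,
    "currency": "GBP",
    "in_stock": True,
    "rating": 4.6,
    "review_count": 38,
}))
```

Because the JSON-LD sits apart from the page template, it is easy to swap in or out when you test it against a Microdata version.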

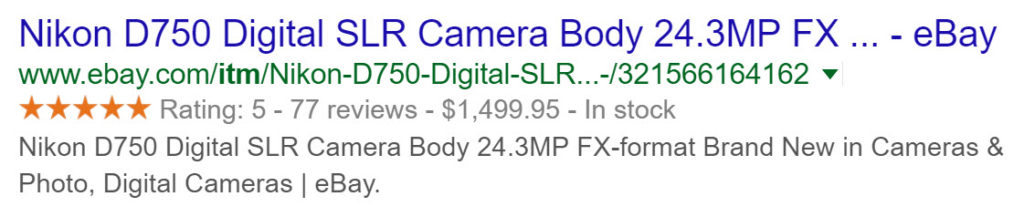

eBay use Microdata to apply the https://schema.org/Product vocabulary to their listing pages.

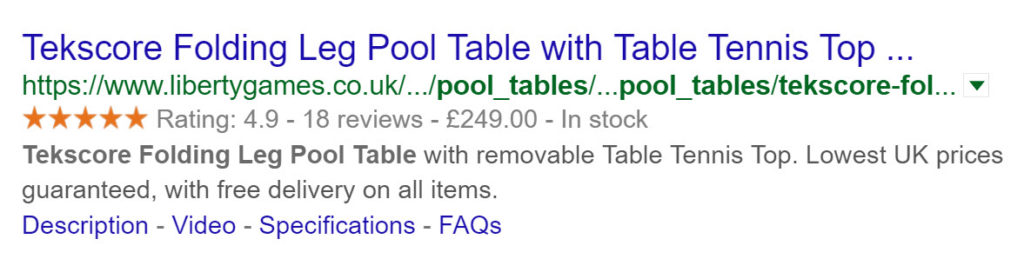

Stuart Kerr from Liberty Games showed me this product page, which has both Microdata and JSON-LD implemented. As he mentioned in this tweet, it appears Google might be using data that is provided only in the JSON-LD for the snippet.

I’d like to be more confident in the view that the data layer should be separated from the presentation layer on a product page, and that your testing should therefore focus entirely on JSON-LD. In reality, though, I think it’s wise to be able to test pages using either and find what works best for your domain.

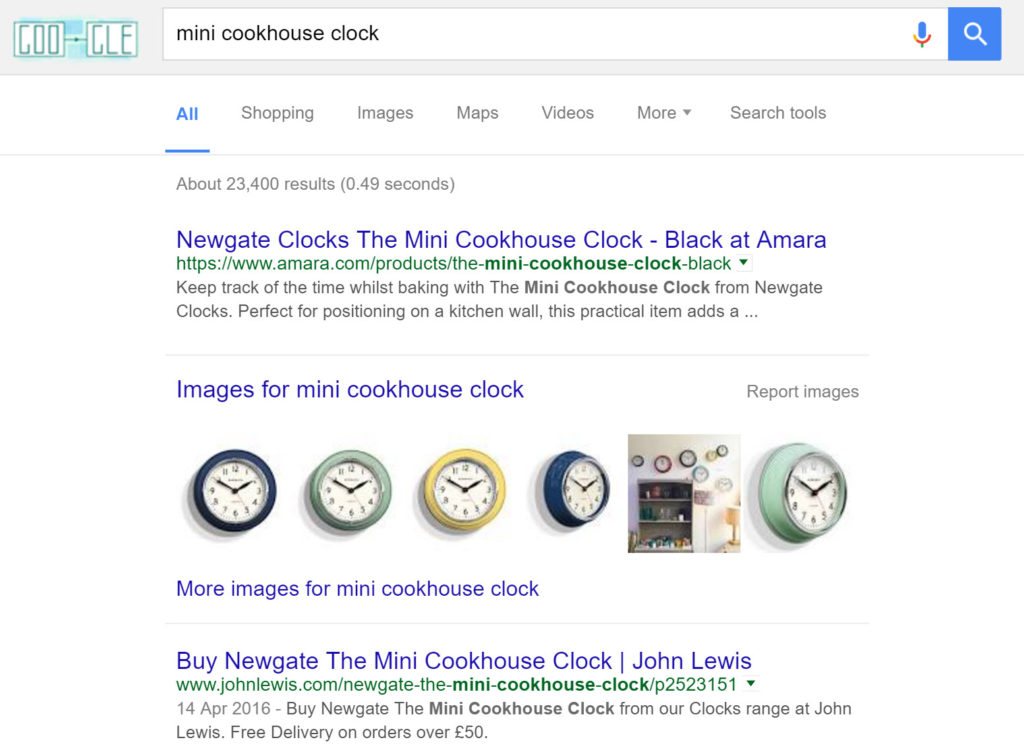

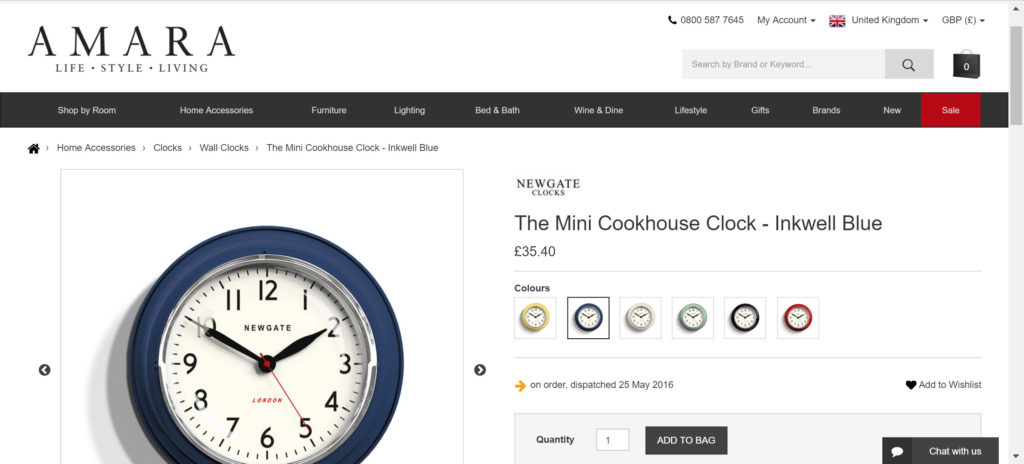

Do a good job of image search

This is a fantastic search result from the team at Amara: a top product ranking, flanked by five images from their domain or subdomains.

Amara provide a high-resolution (1000x1000px) image to Google (responsive in the browser, very nice), with descriptive filenames (/products/huge/105322/the-mini-cookhouse-clock-squeezy-lemon-911892.jpg) and properly constructed alt attributes.
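Descriptive filenames like Amara's are easy to generate from the product name. The sketch below is not Amara's code (we don't know how they build theirs); it simply reproduces a similar pattern, assuming a hypothetical `/products/{size}/{product_id}/` layout and a numeric image ID appended to a hyphenated slug.

```python
import re
import unicodedata


def image_filename(product_name, image_id, ext="jpg"):
    """Turn a product name into a descriptive, hyphenated image filename."""
    # Fold accented characters to plain ASCII, e.g. "Crème" -> "Creme".
    ascii_name = (
        unicodedata.normalize("NFKD", product_name)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_name.lower()).strip("-")
    return f"{slug}-{image_id}.{ext}"


def image_path(product_id, product_name, image_id, size="huge"):
    """Hypothetical path layout modelled on the Amara URL pattern."""
    return f"/products/{size}/{product_id}/{image_filename(product_name, image_id)}"


print(image_path(105322, "The Mini Cookhouse Clock (Squeezy Lemon)", 911892))
# /products/huge/105322/the-mini-cookhouse-clock-squeezy-lemon-911892.jpg
```

The same product name is a sensible starting point for the image's alt attribute, refined by hand where the image shows something more specific.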

It’s difficult to tell, but perhaps Amara have included image URLs in their XML sitemaps too.
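If you want to list product images in your own sitemaps, Google supports an image extension to the standard sitemap format (the `image:image` and `image:loc` elements in the `http://www.google.com/schemas/sitemap-image/1.1` namespace). This is a minimal sketch using Python's standard library; the page and image URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialise with a default namespace and the conventional "image:" prefix.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def image_sitemap(pages):
    """Build a sitemap listing each page URL with its image URLs.

    pages: mapping of page URL -> list of image URLs.
    """
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image_el = ET.SubElement(url_el, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image_el, f"{{{IMAGE_NS}}}loc").text = image_url
    xml_body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body


# Hypothetical product page and image URLs.
print(image_sitemap({
    "https://www.example.com/products/mini-cookhouse-clock": [
        "https://www.example.com/products/huge/105322/the-mini-cookhouse-clock-squeezy-lemon-911892.jpg",
    ],
}))
```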

Optimise your body copy for the long tail

We’ll be revisiting some tips for improving your product page copy later in this series, because for sales performance and the long tail, high-quality, persuasive text is everything.

For search, there are a number of ways you can improve the individual performance of a product page. This (of course) assumes that you already have “unique” product copy and you’re looking for ways to improve it. If you’re replicating manufacturer copy, you’re probably already suffering from a Panda-inflicted penalty.

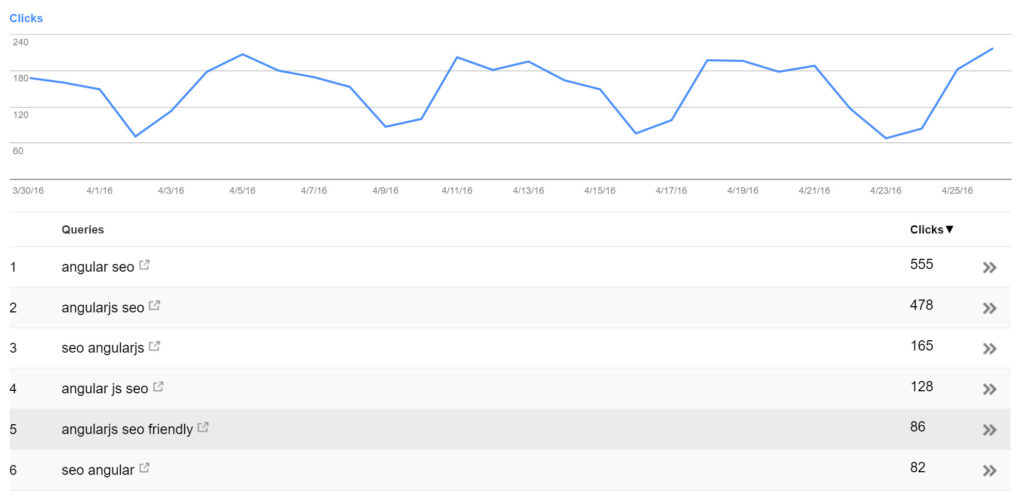

Google’s Search Console Search Analytics provides query data by URL. Select or filter for a URL and then select “Queries”.

Try heading to Search Console and filtering for a high-performing product page. Then click “Queries”. You’ll be presented with a list of valuable key phrases that you already have some visibility for. It’s unlikely that every phrase appears in your body copy, so with some careful, sensible copy improvements you should be able to improve the long-tail performance of the page.
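Once you have exported the queries for a URL, a quick script can show which of them your copy doesn't cover yet. This is a minimal sketch, assuming the export has been reduced to (query, impressions) pairs; the page copy and queries are hypothetical, and the word matching is deliberately naive (no stemming or synonyms), so treat the output as a prompt for a copywriter rather than a to-do list.

```python
import re


def words(text):
    """Lower-case word set; deliberately naive (no stemming or synonyms)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def copy_gaps(queries, body_copy):
    """Return (query, impressions, missing_words) for queries the copy doesn't fully cover.

    queries: (query, impressions) pairs, e.g. exported from the Search Console
    Queries view for a single URL. Sorted by impressions, highest first.
    """
    body_words = words(body_copy)
    gaps = []
    for query, impressions in queries:
        missing = sorted(words(query) - body_words)
        if missing:
            gaps.append((query, impressions, missing))
    return sorted(gaps, key=lambda gap: gap[1], reverse=True)


# Hypothetical page copy and query export.
page_copy = "A compact wall clock in squeezy lemon yellow, with a silent sweep movement."
queries = [
    ("yellow wall clock", 320),
    ("silent wall clock kitchen", 210),
    ("lemon clock", 90),
    ("retro kitchen clock battery", 150),
]
for query, impressions, missing in copy_gaps(queries, page_copy):
    print(f"{query!r} ({impressions} impressions) is missing: {', '.join(missing)}")
```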

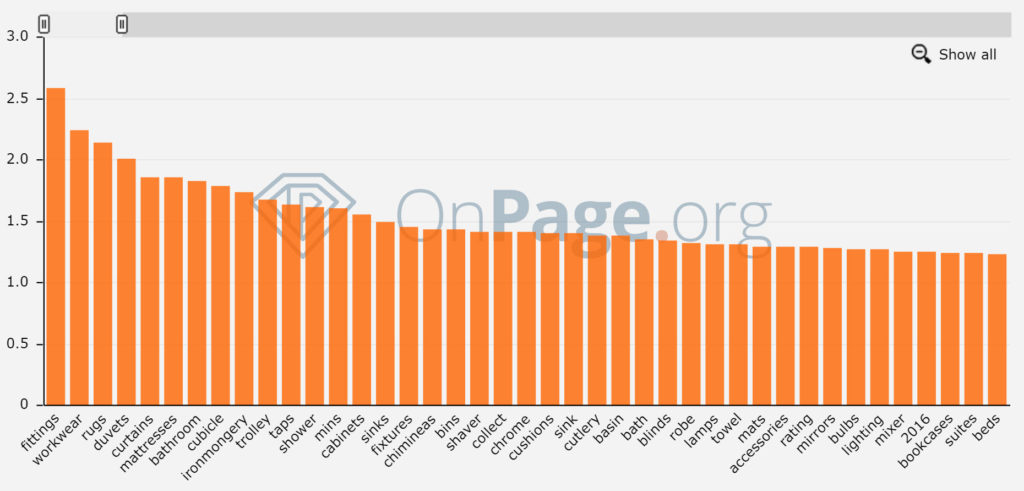

You can use a similar technique with different tools. OnPage.org’s excellent TF*IDF analyser provides some potentially powerful insight into the terms that occur most frequently on pages that rank well in a search result:

OnPage.org’s TF*IDF analyser counts term frequency for a Google query, providing a useful basis for further copy optimisation.
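TF*IDF weights a term by how often it appears on a page (term frequency), discounted by how many of the compared pages contain it (inverse document frequency), so terms that every page uses score zero. The sketch below uses one common textbook variant; it is not OnPage.org's implementation, whose exact weighting we don't know, and the page texts are hypothetical.

```python
import math
import re
from collections import Counter


def tf_idf(documents):
    """Score terms per document: (count / doc length) * log(N / docs containing term)."""
    tokenised = [re.findall(r"[a-z0-9]+", doc.lower()) for doc in documents]
    n_docs = len(tokenised)
    # Document frequency: how many documents contain each term at least once.
    doc_freq = Counter(term for words in tokenised for term in set(words))
    scores = []
    for words in tokenised:
        counts = Counter(words)
        scores.append({
            term: (count / len(words)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


# Hypothetical text from three ranking pages.
pages = [
    "fast nvme ssd with fast read speeds",
    "ssd review: read and write benchmarks",
    "cheap ssd deals and ssd prices",
]
for i, page_scores in enumerate(tf_idf(pages), start=1):
    top = sorted(page_scores.items(), key=lambda item: item[1], reverse=True)[:3]
    print(i, [term for term, _ in top])
```

Real tools compare against many more ranking pages and usually strip stop words first, but the principle is the same.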

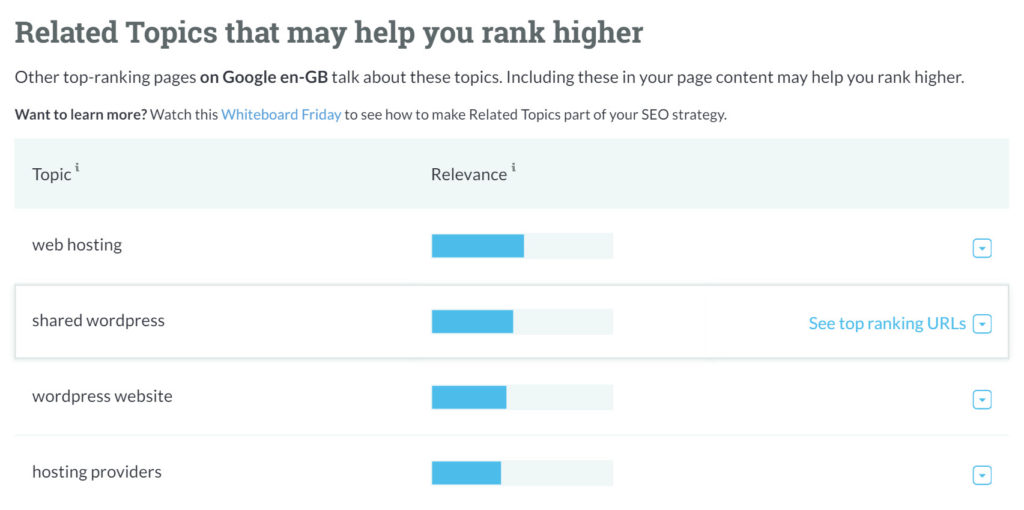

Moz provide a similar tool in their Pro toolset: “Related Topics is a new feature in Moz Pro that helps you understand how phrases and topics influence the SERP, allowing you to broaden your content and build out pages instead of devoting yourself to time-consuming research.”

Moz’s topic tool suggests related topics that appear on competing pages in a search result, which you might want to consider covering on your own page.

Site speed/performance

Tom recently gave a top presentation on the topic of site speed for content marketers at BrightonSEO.

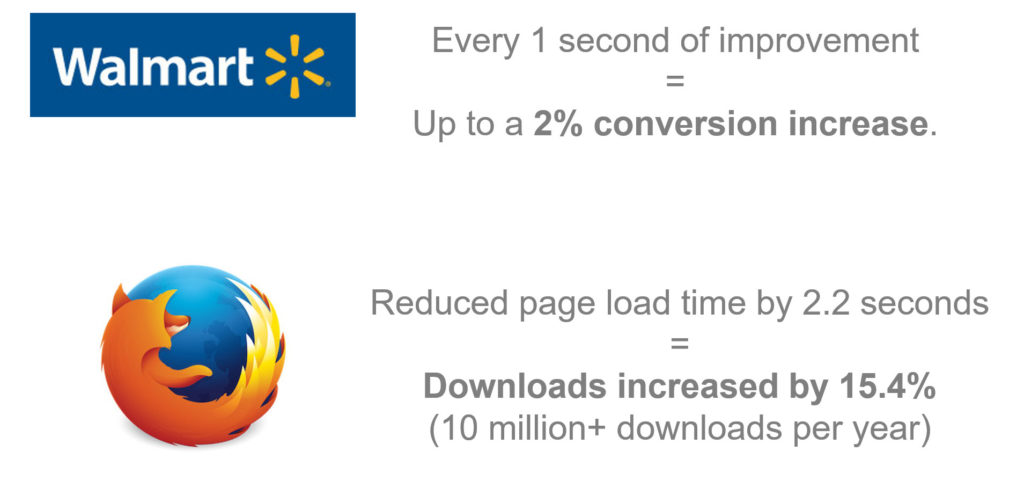

Organisations like Walmart and Mozilla have seen substantial improvements in revenue and conversion from controlled tests on site speed, yet many marketers still don’t embrace the need for performance optimisation.

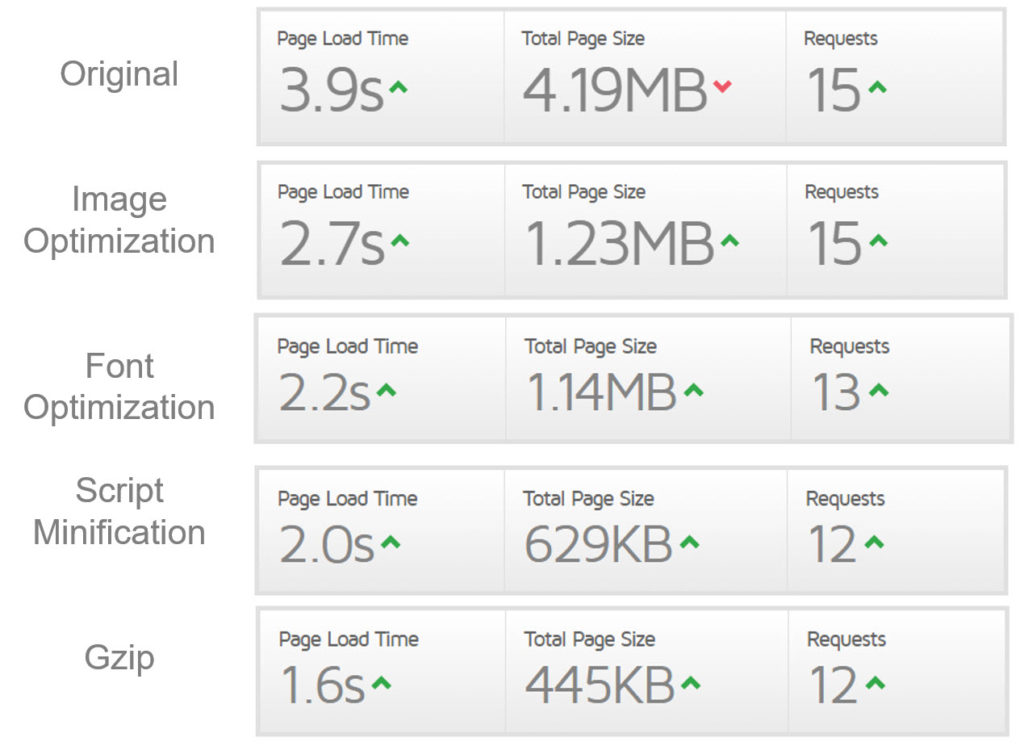

While there’s an awful lot more to page speed optimisation, some of the most notable improvements in page load can be achieved through proper image optimisation.

Look out for:

- Format & Compression – JPEG for photos, PNG for images with fewer colours / transparency. Balance file size & aesthetics.

- Dimensions – What is the maximum width and height at which the image will be displayed?

- Replacements – “The fastest HTTP request is the one not made.”

Aim to use fonts for text, vector graphics for logos and shapes, and CSS effects (shadows, gradients, etc.) wherever possible.
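The “Dimensions” question can be answered with a little arithmetic before any image reaches a compression tool: there is no point shipping more pixels than the largest slot the image fills. This is a minimal sketch, assuming you want one export for standard screens and one at double density for high-DPI screens (our assumption, not a rule); the actual resizing and compression would still be done by your image library or build tool.

```python
def fit_within(width, height, max_width, max_height):
    """Scale an image down to fit a display box, keeping its aspect ratio.

    Never upscales: an image already smaller than the box is returned as-is.
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def export_sizes(width, height, max_width, max_height, densities=(1, 2)):
    """Pixel sizes to export for standard (1x) and high-density (2x) screens."""
    return {
        density: fit_within(width, height, max_width * density, max_height * density)
        for density in densities
    }


# A hypothetical 4000x3000 camera original shown in a 500x500px product image slot.
print(export_sizes(4000, 3000, 500, 500))
# {1: (500, 375), 2: (1000, 750)}
```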

All in all, Tom achieved a 240% page load time improvement by implementing changes in the following categories:

Read Tom’s full presentation here.

Use prefetch & prerender

As Mike King demonstrated, correctly implementing rel="prerender prefetch" can dramatically improve the page load experience for the user. That’s because the browser quietly loads the specified page in an invisible tab, waiting and hoping (!) that it’s the page you’ll request next.

This has potentially very useful implications for product pages on retail sites:

- By prerendering the basket > checkout steps of the conversion funnel, you could speed up the perceived browsing experience in this crucial final phase of the buyer’s journey

- By prerendering the link most likely to be clicked next, e.g. a “top selling product” or the first result in your “related products” section, you might get lucky and speed up the experience for a significant number of your users.
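Choosing what to prerender is the hard part, because a wrong guess wastes the user's bandwidth. A minimal sketch, assuming you can pull (current page, next page) pairs from your analytics: it only emits a prerender hint when a single next page dominates. The `min_share` threshold and the sample paths are hypothetical.

```python
import html
from collections import Counter


def prerender_hint(current_path, transitions, min_share=0.5):
    """Return a prerender <link> for the most likely next page, or "" if none is likely enough.

    transitions: (from_path, to_path) page-view pairs, e.g. from your analytics.
    min_share: hypothetical threshold; prerendering a page the user never
    visits wastes their bandwidth, so only hint when one page dominates.
    """
    next_pages = Counter(to for frm, to in transitions if frm == current_path)
    total = sum(next_pages.values())
    if total == 0:
        return ""
    best, count = next_pages.most_common(1)[0]
    if count / total < min_share:
        return ""
    return f'<link rel="prerender" href="{html.escape(best)}">'


# Hypothetical navigation data: most basket visits go straight to checkout.
transitions = (
    [("/basket", "/checkout")] * 8
    + [("/basket", "/products/ssd-1tb")] * 2
    + [("/products/ssd-1tb", "/basket")] * 5
)
print(prerender_hint("/basket", transitions))
print(repr(prerender_hint("/checkout", transitions)))
```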

To learn more, read this article by Steve Souders.

Carry out product level competitor link analysis

What’s driving the product level query rankings for your products?

There’s something about URL-by-URL analysis that seems to put some SEOs off – it’s as if the thought of reviewing the top 1,000 revenue-driving pages is an impossible mountain to climb. It isn’t. It just requires a methodology that makes the most efficient use of your time.

In my opinion, understanding the types of links required to move a search result is a foundational principle of good SEO.

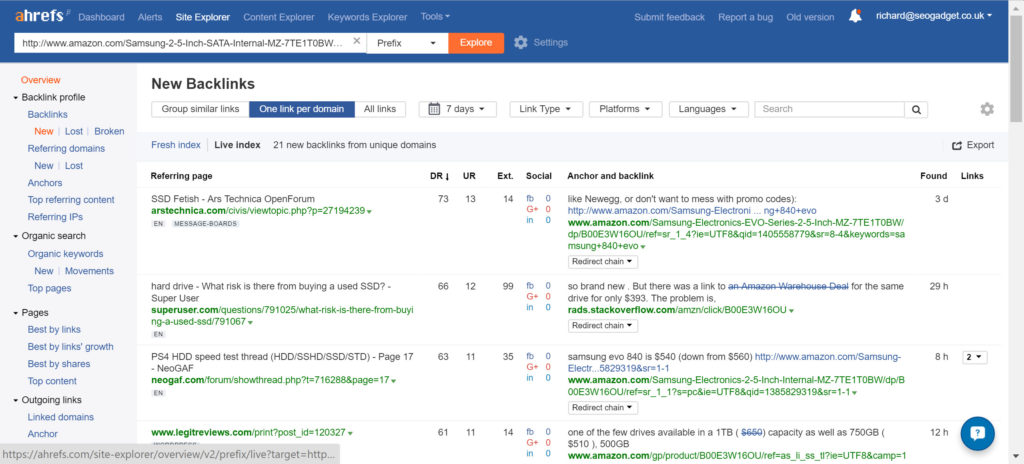

Use Ahrefs for your link analysis. It’s consistently the best tool for the job.

Take this result for a 1TB SSD (a great search opportunity if you’re in the market). Obviously, Amazon isn’t the only retailer in the search result, but I’d argue they set the baseline you’ll need to at least get near, in terms of links to the product URL, if you want to compete. Fortunately, not all of their links are good (take a look and you’ll know what I mean), but the style and type of the good links in that SERP are well worth studying:

- Affiliate sites

- Forums (technical / gaming)

- Reviews (although inevitably these result in affiliate links)

If nothing else, you should realise the value of a) *carefully* promoting products for reviews b) a good old fashioned affiliate program and c) participation in community and forum sites.
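When you export the referring pages for a competitor's product URL from a backlink tool such as Ahrefs, a rough first sort by link type makes the profile easier to read. This is a minimal sketch, assuming the export has been reduced to a list of referring page URLs; the `LINK_TYPES` marker lists are crude, hypothetical heuristics (first match wins), and each domain counts once. It won't tell you which links are good, so manual review is still needed.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical keyword rules; tune them after eyeballing your own export.
LINK_TYPES = {
    "forum": ("forum", "community", "board", "reddit."),
    "review": ("review", "tested", "benchmark"),
    "affiliate": ("deal", "voucher", "coupon", "best-"),
}


def classify(referring_url):
    """Return the first link type whose markers appear in the URL, else "other"."""
    lowered = referring_url.lower()
    for link_type, markers in LINK_TYPES.items():
        if any(marker in lowered for marker in markers):
            return link_type
    return "other"


def link_profile(referring_urls):
    """Count link types, one vote per referring domain."""
    seen_domains = set()
    counts = Counter()
    for url in referring_urls:
        domain = urlsplit(url).netloc.lower()
        if domain in seen_domains:
            continue
        seen_domains.add(domain)
        counts[classify(url)] += 1
    return counts


# Hypothetical referring pages for a competitor's 1TB SSD product URL.
urls = [
    "https://forums.example-gaming.net/t/1tb-ssd-upgrade/4411",
    "https://www.techsite.example/reviews/1tb-ssd-roundup",
    "https://www.techsite.example/news/ssd-price-drop",
    "https://deals.example.org/storage/ssd",
]
print(link_profile(urls))
```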

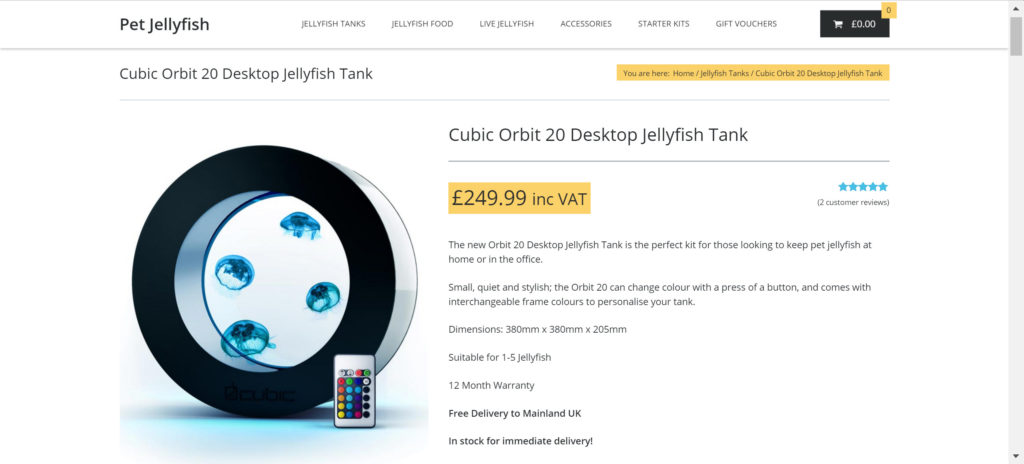

I’d imagine this pet jellyfish product page is great fun to promote for links…

I believe that knowing the search results in your vertical at product level, and having a good understanding of the types of links required (and the types of links to definitely, definitively avoid), can make a big difference.

In many cases, you only need two or three good links for a serious hike in visibility. And remember: if you’re still unsure whether you can justify the time spent, that’s exactly what your competition would prefer you to think.

Perform product level keyword research and individually optimise meta data

I’ve spent a lot of time with successful small and medium-sized retailers, and a common theme amongst them all is an obsession with their products and how those products are presented. This obsession extends to optimising at keyword level, matching the title and description in the page’s meta data to the query.

If you can’t stomach the idea of managing a list of 10,000 products, break the task down to the top 20 products in each of your sub-categories, prioritised by revenue.
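Building that shortlist from a sales export takes only a few lines. This is a minimal sketch, assuming each product row carries hypothetical `sku`, `subcategory` and `revenue` fields; adjust the names to whatever your reporting system produces.

```python
from collections import defaultdict


def priority_products(products, per_subcategory=20):
    """Shortlist the top-revenue products in each sub-category.

    products: dicts with hypothetical "sku", "subcategory" and "revenue" keys,
    e.g. from a sales report export.
    """
    by_subcategory = defaultdict(list)
    for product in products:
        by_subcategory[product["subcategory"]].append(product)
    return {
        subcategory: [
            p["sku"]
            for p in sorted(items, key=lambda item: item["revenue"], reverse=True)[:per_subcategory]
        ]
        for subcategory, items in by_subcategory.items()
    }


# Hypothetical catalogue rows; per_subcategory=2 keeps the example short.
catalogue = [
    {"sku": "SSD-1TB", "subcategory": "ssd", "revenue": 52000},
    {"sku": "SSD-500", "subcategory": "ssd", "revenue": 31000},
    {"sku": "SSD-250", "subcategory": "ssd", "revenue": 9000},
    {"sku": "HDD-4TB", "subcategory": "hdd", "revenue": 18000},
]
print(priority_products(catalogue, per_subcategory=2))
```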

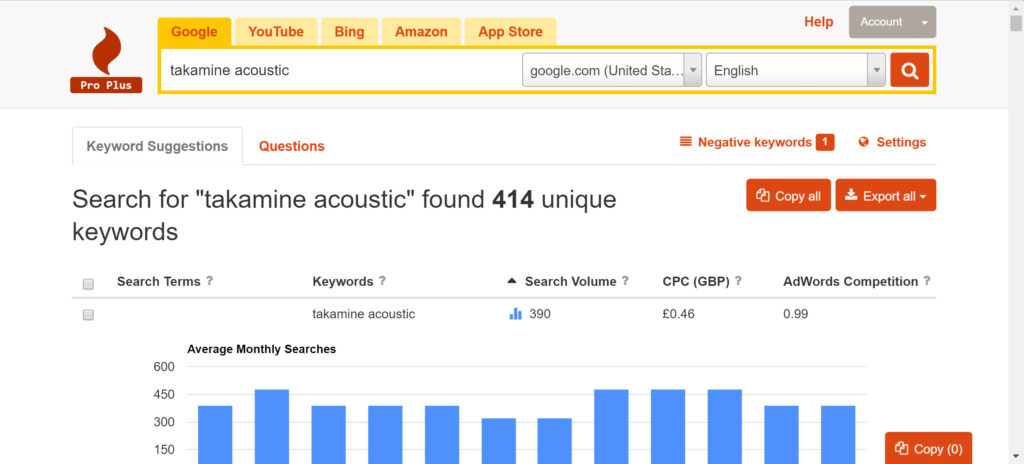

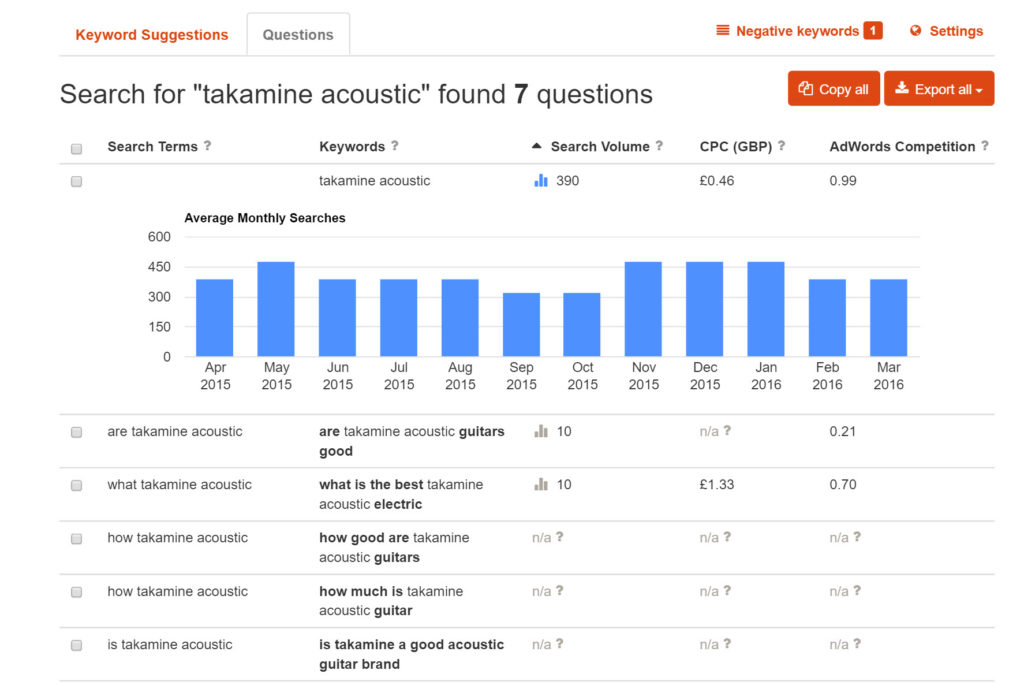

Keyword Tool Box is amazing for long tail keyword research for product pages.

I’m a big fan of Keywordtoolbox.io. It’s fast and great at expanding keywords, with sortable search volume information. It also has a commonly asked questions section, which you can use as the basis for an FAQ section in your product page copy.

You have to take time to be thorough in the pursuit of marginal gains. If you could increase clicks on your top 1,000 product pages by 5 to 10%, you’d do it, right?

You can even model the proposed meta descriptions when you’re trying to convince your marketing department that the complete product page optimisation process is worth pursuing:

My snippet includes a dynamic element in the meta description: the best delivery date and warranty, two key drivers of conversion in the music hardware industry. Made with Portent’s snippet preview tool.
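A dynamic meta description like this can be assembled from product data at render time. This is a minimal sketch under several assumptions of our own: a simple "N working days, skip weekends" delivery rule, hypothetical field names, and a 155-character budget that is only a rough proxy (Google truncates by pixel width, so a preview tool is still the real check).

```python
from datetime import date, timedelta

# Rough proxy only: Google truncates snippets by pixel width, not character count.
MAX_DESCRIPTION_CHARS = 155


def delivery_date(order_day, working_days):
    """Hypothetical rule: delivery after N working days, skipping weekends."""
    day = order_day
    remaining = working_days
    while remaining > 0:
        day += timedelta(days=1)
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return day


def meta_description(product, order_day):
    """Build a meta description that includes the delivery date and warranty."""
    delivery = delivery_date(order_day, product["delivery_days"])
    text = (
        f"Buy the {product['name']} for £{product['price']:.2f}. "
        f"Order today for delivery by {delivery:%a %d %b}, "
        f"with a {product['warranty_years']}-year warranty."
    )
    if len(text) > MAX_DESCRIPTION_CHARS:
        # Cut at the last whole word that fits, leaving room for an ellipsis.
        text = text[: MAX_DESCRIPTION_CHARS - 1].rsplit(" ", 1)[0] + "…"
    return text


# Hypothetical product; ordered on Friday 13 May 2016, so the weekend is skipped.
print(meta_description(
    {"name": "Example 88-Key Digital Piano", "price": 499, "delivery_days": 1, "warranty_years": 3},
    date(2016, 5, 13),
))
```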

Kees Beckeringh

Hi Richard,

Great post. I can’t find the images in Amara’s XML sitemap, though: https://www.amara.com/sitemap-products.xml.

Where did they list them? I’d love to see the example.

Richard Baxter

I wasn’t sure if they’d have listed the images in the XML sitemap or not; personally I think it’s an optimisation too far. Thanks for checking!

Aaron Bradley

Thanks for this excellent article Richard.

Further to your comments regarding the employment of JSON-LD, I’ll note that with their latest update to their structured data documentation (https://bit.ly/1URJYBn) following their “rich cards” announcement, Google has now explicitly confirmed that JSON-LD is supported for all public data types except breadcrumbs.

In fact, JSON-LD is now their preferred syntax for schema.org structured data, and they now “recommend using JSON-LD where possible.”

Richard Baxter

Thanks for following up Aaron!

Progetto

Very nice article!

The ‘Share this’ button isn’t working on this page.

Richard Baxter

Yeah that’s ok, we’re a few weeks off releasing a new site so this one’s in code freeze, it won’t get fixed on this version :D

Joe

Where would you prioritize quality and volume of product page copy? I’m running into problems with a few clients who don’t want much text on their product pages because they want to optimize entirely for CRO across ads and all other traffic.

I’ve tried explaining the importance of descriptive and engaging content that helps rank well for head and long tail queries, but I’m getting a lot of pushback on that. Any tips or links?