What is faceted navigation?

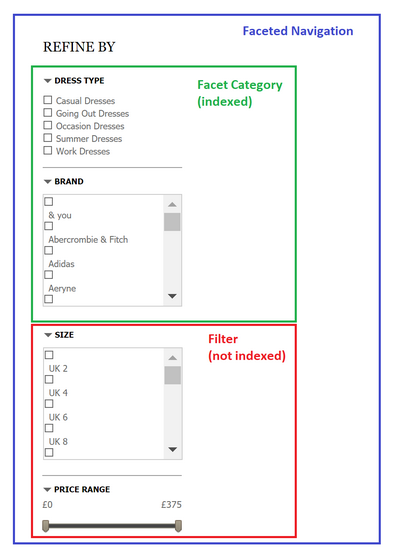

Faceted navigation is normally found in the sidebar of an ecommerce website and contains facets and filters. It allows users to select combinations of the attributes that are important to them, to filter a list of products down to the ones that match their needs.

Facets are indexed categories which help specify a product listing and act as an extension of the site’s main categories. Facets should add a unique value for every selection and, as they are indexed, every facet on a website should send relevancy signals to search engines by ensuring that all important attributes appear within the content of the page.

Filters are used to sort or narrow items within a listings page. Although they are necessary for the user, they don’t change the page content (it remains the same, just sorted in a different order), so multiple URLs end up serving the same content and create duplicate content issues.

What potential problems can it cause?

Because every possible combination of facets is typically a unique URL, faceted navigation can create a few problems for SEO:

Naïve faceted navigation

Leaving all facets and filters crawlable and indexable – even when the page content does not change – can lead to duplicate content issues, significant waste of crawl budget and link equity dilution. As the parameters multiply, the number of near-duplicate pages grows exponentially and links may be coming into all of the various versions, diluting link equity and limiting the page’s ability to rank organically. This also further increases the chance of keyword cannibalisation, a scenario in which multiple pages compete for the same keywords, resulting in less stable, and often lower, rankings.
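To give a feel for how quickly the number of URLs grows, here is a minimal sketch in Python. The facet groups and values are hypothetical, and the counts assume that every combination produces its own URL and that selection order does not create additional URLs.

```python
from math import prod

# Hypothetical facet groups for a single "skirts" category.
facets = {
    "color": ["black", "white", "red", "blue"],
    "size": ["8", "10", "12", "14"],
    "material": ["leather", "cotton", "denim"],
}

def single_select_urls(facets):
    """Count URLs when each group has at most one value selected."""
    # Each group offers (number of values + 1) choices: one value, or none.
    return prod(len(values) + 1 for values in facets.values())

def multi_select_urls(facets):
    """Count URLs when any subset of values may be selected in each group."""
    # Each value is either on or off, so a group with n values has 2**n states.
    return prod(2 ** len(values) for values in facets.values())

print(single_select_urls(facets))  # 100
print(multi_select_urls(facets))   # 2048
```

Both counts include the unfiltered category page. Three small facet groups already produce thousands of URLs once multi-select is allowed, and every extra value in a group doubles the total.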

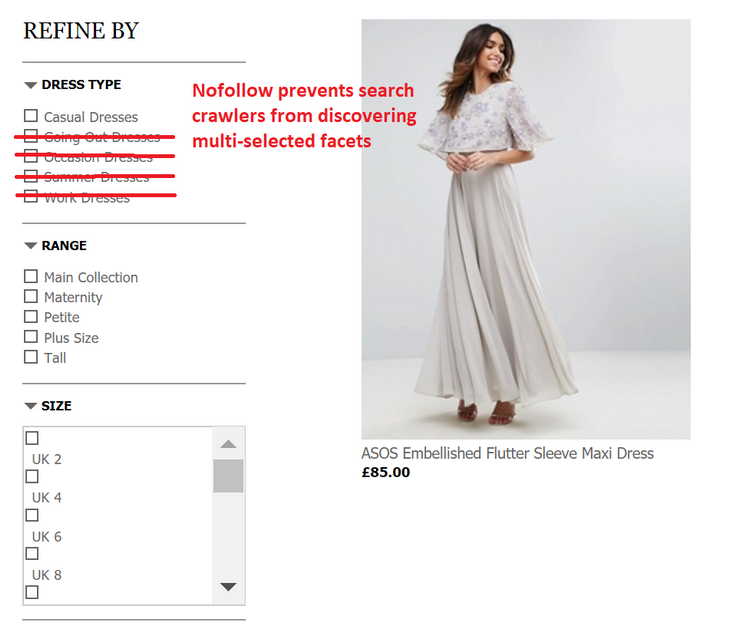

To make sure search engine bots are not wasting valuable crawl budget on pages that are not adding any value, some rules will need to be created to limit crawling and indexing within filters. For example, determine which facets don’t have an SEO benefit (e.g., “size”, “price”) and blacklist them or prevent search crawlers from discovering multi-selected facets.

The hatchet approach

The opposite reaction to the above solution is to block crawling and indexation of every filtered page on the site, preventing it from ranking for a large set of relevant keywords.

Faceted Navigation solutions

When deciding on a faceted navigation solution, we will have to decide what we want in the index and increase the number of useful pages which get indexed while minimising the number of useless pages we don’t want indexed.

There are multiple solutions available to help deal with these issues, with each implementation having advantages and disadvantages:

Solution 1: AJAX

The main benefit of using AJAX for faceted navigation is that a new URL is not created when a user is on a page and applies a filter or sort order. The listing is updated client-side with JavaScript, without a full page reload.

When using this approach, you just need to make sure that there’s an HTML crawl path to the products and pages you would like to rank, and make sure that search engines can access every valuable page. By utilising the pushState method of the HTML5 history API, and configuring your server to respond to these requests with HTML rendered server-side, you can benefit from a fast, AJAX-powered faceted navigation without sacrificing the SEO-friendliness of your website.

This is a great approach in theory, as it can eliminate the issues of duplicate content, cannibalisation, and waste of crawl budget. However, it can’t be used as an ‘SEO patch’ for an existing faceted navigation. It also requires a large up-front investment in development time and a bulletproof execution.

If you have a question about AJAX-based faceted navigation, or JavaScript SEO in general, get in touch with us.

Solution 2: Meta Robots and Robots.txt

This is a reliable approach to blocking URLs that are created from faceted navigation as – even if they are directives rather than enforcements – search engine spiders usually obey them. The idea is to set a custom parameter to designate all the various combinations of filters and facets you want blocked (e.g. “noidx=1”), and then add it to the end of each URL string you want to block. Then, you can have an entry in your robots.txt file to block these out:

User-agent: *

Disallow: /*noidx=1
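Before deploying a wildcard rule like this one, it can help to check which URLs it would actually match. The following is a simplified sketch in Python of the wildcard matching that major crawlers apply to robots.txt paths (`*` matches any sequence of characters, a trailing `$` anchors the end of the URL). It is not a full robots.txt parser, and the function names are made up for illustration.

```python
import re

def rule_to_regex(rule):
    """Translate a robots.txt path rule into a regex anchored at the path start."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape everything, then turn the escaped '*' back into "any characters".
    pattern = re.escape(rule).replace(r"\*", ".*")
    return re.compile("^" + pattern + ("$" if anchored else ""))

def is_disallowed(path_and_query, disallow_rules):
    """Return True if any Disallow rule matches the path plus query string."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in disallow_rules)

rules = ["/*noidx=1"]
print(is_disallowed("/skirts?color=black&size=10&noidx=1", rules))  # True
print(is_disallowed("/skirts?color=black", rules))                  # False
```

Note that, under this matching, the rule would also catch a value such as `noidx=10`, since rules match as prefixes unless they end in `$`.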

You can also mark all the pages you want blocked with a meta robots noindex tag in the head of each page. Please note that it’s recommended to allow 3 or 4 weeks for search engine bots to pick up these changes before blocking these pages with the robots.txt file, because a crawler that is blocked from a page cannot see its noindex tag.

Once meta robots and robots.txt blocking directives are in place, we will need to configure the server to automatically add the noidx= parameter to URLs according to some rules: for example, when more than a set maximum number of facet groups is selected (to avoid indexing categories that are too narrow, like www.domain.com/skirts?color=black&size=10&material=leather), or when two or more filters within a group are selected (www.domain.com/skirts?color=black&color=white).
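The two example rules above could be expressed roughly as follows. This is a minimal Python sketch, not a production implementation: the threshold of two facet groups is an assumption, the function names are hypothetical, and the code treats every query parameter other than `noidx` as a facet.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qs, parse_qsl, urlencode

MAX_FACET_GROUPS = 2  # assumed threshold; choose one that suits your catalogue

def needs_noidx(url, max_groups=MAX_FACET_GROUPS):
    """Apply the two example rules to a facet URL."""
    query = parse_qs(urlsplit(url).query)
    query.pop("noidx", None)  # ignore the marker itself
    too_many_groups = len(query) > max_groups
    multi_select = any(len(values) > 1 for values in query.values())
    return too_many_groups or multi_select

def tag_url(url):
    """Append noidx=1 when the URL should be kept out of the index."""
    if not needs_noidx(url):
        return url
    parts = urlsplit(url)
    pairs = [(k, v) for k, v in parse_qsl(parts.query) if k != "noidx"]
    pairs.append(("noidx", "1"))
    return urlunsplit(parts._replace(query=urlencode(pairs)))

print(tag_url("https://www.domain.com/skirts?color=black"))
print(tag_url("https://www.domain.com/skirts?color=black&color=white"))
print(tag_url("https://www.domain.com/skirts?color=black&size=10&material=leather"))
```

The first URL is returned unchanged, while the other two gain `noidx=1` at the end of the query string, which is the position the robots.txt rule expects.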

There are some important considerations to bear in mind when using this approach:

- In order to avoid duplicate content issues, every indexed page should have unique and optimised on-page elements (at least meta title, headings and meta description).

- Strict URL ordering will help you avoid duplicate content too by ordering attributes always in the same way, regardless of the order the user selected them.

- Remember to leave one version of the content – the preferred one – crawlable and indexable so search engines can visit it and include it in their search results.
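The strict URL ordering mentioned in the list above can be sketched as a small normalisation function. This is an illustrative Python example: the `FACET_ORDER` list is an assumption, and facets not in the list are simply sorted alphabetically after the known ones.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed fixed order of facet groups for this site.
FACET_ORDER = ["color", "size", "material"]

def normalise_facet_order(url):
    """Rewrite the query string so facets always appear in the same order."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query)

    def sort_key(pair):
        name, value = pair
        # Known facets keep their configured rank; unknown ones go last.
        rank = FACET_ORDER.index(name) if name in FACET_ORDER else len(FACET_ORDER)
        return (rank, name, value)

    ordered = sorted(pairs, key=sort_key)
    return urlunsplit(parts._replace(query=urlencode(ordered)))

print(normalise_facet_order("https://www.domain.com/skirts?size=10&color=black"))
# https://www.domain.com/skirts?color=black&size=10
```

One way to use such a function is to generate every facet link through it, and to redirect any request whose URL differs from its normalised form, so that each combination of facets exists at a single URL.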

Solution 3: Directing your Indexation with Rel=canonical

This is a simple solution to help direct the robots toward the content you really want crawled while keeping the content that is helping users find products. Whilst the rel=canonical element will help you avoid duplicate content issues, this approach won’t save you any crawl budget (this can be achieved with the previous robots.txt solution).

Furthermore, canonical tags can often be ignored by search engine bots, so you should use this in conjunction with another approach to direct search engines toward the preferred – the highest converting – version of each page.
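As an illustration, a canonical tag for a filtered or sorted URL could be generated along these lines. This is a sketch in Python under stated assumptions: the parameter names in `NON_CANONICAL_PARAMS` are hypothetical, and which parameters you strip depends on which versions of a page you want search engines to treat as preferred.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that reorder or restyle a listing without changing it.
NON_CANONICAL_PARAMS = {"sort", "view", "page_size"}

def canonical_link_tag(url):
    """Build a rel=canonical tag pointing at the URL without sort-style parameters."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in NON_CANONICAL_PARAMS]
    canonical = urlunsplit(parts._replace(query=urlencode(kept), fragment=""))
    # Escape the URL so characters such as & are valid inside the attribute.
    return '<link rel="canonical" href="{}">'.format(escape(canonical, quote=True))

print(canonical_link_tag("https://www.domain.com/skirts?color=black&sort=price_asc"))
# <link rel="canonical" href="https://www.domain.com/skirts?color=black">
```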

Solution 4: Google Search Console

Whereas this is a good method to create temporary fixes while building a better faceted navigation, it only tells Google how to crawl your site (rather than actually fixing the problem) and should be thought of as a last resort.

Through the URL Parameters tool in the console, you can indicate what effect each of your parameters has on the page (whether it changes its content or not) and how Google should treat these pages (remember that this will only instruct Googlebot and therefore won’t work for Bing or Yahoo user-agents).

Best Practices for Faceted Navigation

Here are some essential tips to help you make the most out of your faceted navigation:

- Prevent clickable links when no products exist for the category/filter

- Each page should link to its children and parent (commonly achieved with breadcrumb trails). Depending on your product, it may also be beneficial to include links to sibling pages.

- Strict URL facet ordering (attributes always ordered in the same way). Failing to do this can result in duplication issues.

- Allow for indexation of particular facet combinations with high-volume search traffic.

- Configure URL Parameters in Google Search Console (but remember not to rely on this solution)

- Don’t rely on noindex & nofollow (rel=nofollow and canonical don’t preserve crawl bandwidth).

- Nofollow after first filter in a group (alternatively, robots.txt disallow)

- Some parameters should never be indexable
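Two of the tips above (no clickable links for empty results, and nofollow after the first filter in a group) can be combined in the code that renders each facet option. The following Python sketch is only one possible shape for this: the function name, its arguments and the `facet-disabled` class are all made up for illustration.

```python
from html import escape

def render_facet_option(label, href, product_count, group_has_selection):
    """Render one facet value as HTML.

    - No products: plain text, so neither users nor crawlers get a dead-end link.
    - A value is already selected in this group: link carries rel="nofollow".
    """
    text = "{} ({})".format(escape(label), product_count)
    if product_count == 0:
        return '<span class="facet-disabled">{}</span>'.format(text)
    rel = ' rel="nofollow"' if group_has_selection else ""
    return '<a href="{}"{}>{}</a>'.format(escape(href, quote=True), rel, text)

print(render_facet_option("Black", "/skirts?color=black", 12, False))
print(render_facet_option("White", "/skirts?color=black&color=white", 5, True))
print(render_facet_option("Red", "/skirts?color=red", 0, False))
```

The first call produces a normal link, the second a nofollowed link because a colour is already selected, and the third a non-clickable label.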

Conclusion

I have outlined four different solutions to handle navigation through facets and filters, but every business is different and there isn’t one ‘best’ approach that will work for every website and platform. When deciding on the perfect solution for your business, remember that your category system and site architecture should reflect your customers’ needs. Happy customers and happy search engines are the keys to success in ecommerce navigation.

Finally, whilst an optimised faceted navigation can help your site rank for a wider set of terms, it also represents a high risk when not properly handled. It’s vital to test at every stage of development to make sure the system is correctly set up.

If you’ve got any questions, let me know in the comments, check out our ecommerce consulting section for more information, or drop us a line!

Richard Baxter

Hey Maria! Thank you for an excellent article, it’s really nice to see an important architecture best practice covered on BV again.

Some observations on this topic from me; I’ve noticed that the complexity of an ecommerce site’s faceted nav can be a big contributor to bloated page load times. Reducing the number of attributes products and categories have can reduce the potential for duplicate content and speed up the site.

Often it’s useful to have a chat with the database engineers behind the site too. They might be able to help optimise in this area.

Also I wrote a guide to using wildcards in robots.txt some time ago which (I think!) is still up to date – might be a useful next step for some of our readers.

Great stuff as always!

Ajit Kular

This is really great. You have covered all points in this article. I’d just like to mention that if a store owner is blocking layered navigation filters via a noindex tag, they should remove the canonical tag too. Otherwise, it would confuse the search engines.

I think this is one of the best articles that I ever read about Ecommerce Category Navigation filters. Must do point for Ecommerce Store owners.

Jan-Willem Bobbink

How sure are you Google is not able to process and run AJAX JavaScript?

Anton P.

This article is really very informative, and has been useful to me. I am currently facing the question of faceted navigation optimization. Thank you!

Mobius

Thanks for an insightful post. So many amazing ideas I had never even considered. I LOVE ARTICLES LIKE THIS THAT REALLY MAKE YOU THINK.

Question though — Have you tried adding the current year in your title? If so, is there data on how that performs in terms of behavior signals for SEO?

Thanks again, this was great.

Maria Camanes

Hi Dave,

The method is suitable for preventing crawling; however, those URLs might still be indexed (if they have external backlinks, for example) so they will still need to be noindexed.

Maria Camanes

Hi Mobius,

Unfortunately, we don’t have any data for adding the current year in titles yet, but that’d definitely make a great case study!

James

A brilliant read for those starting out. It is certainly true that having an internal link strategy is of great importance.

Nick Eubanks

I would add an additional solution: Custom built ruleset that allows for facets to be representative of product attributes *with* search volume and therefore builds them out as child directories off a parent category, based on user-set rules regardless of user selection order. With 3 levels of URL impact; tiered, parameterized, or hidden (via AJAX); where parameters exist to support the need to be linked (think email, remarketing, chat, etc.) but can then be abstracted/blocked via robots.

Dave

Hi Maria, great article. We’ve long needed a comprehensive guide to faceted navigation best practices.

One question, I’m not clear on what you’re saying about nofollowing facet links. It’s a method I’ve used in the past. Are you saying it is a suitable solution for preventing crawling of facets with no SEO benefit, or unsuitable?

Richard Hearne

One thing that comes to mind here is that some of the suggested fixes may have very different outcomes depending on whether Google has already crawled/indexed facet URLs. The NOFOLLOW attribute on facet links sounds nice, but might not be appropriate if Google has already indexed a large number of the target URLs. In that case canonicalisation might be a better solution. I do think the NOFOLLOW will work nicely if it’s in place from launch, but it’s far less ideal to implement later if it creates a massive number of orphans.

Another great way to handle this is to put your facet into URL fragments # and let JS load the page based on the data in the fragment. Currently Googlebot will not index the fragment, so it’s quite an elegant solution.