Why does a website need an error-free sitemap.xml file?

The advice we always hear from Google is to keep your sitemaps validated and as error-free as you can. The sitemap file is used to declare your preferred canonical URLs.

Because the sitemap acts as a signal, an error in the file is a pretty big deal and best avoided.

I’ve always felt that a fast-loading sitemap file is also advisable, so speed up the dynamic parts of the file generation if you can. I’ve come across very large sitemaps that are clearly regenerated on every request without any caching. Check this carefully.
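If you’d like a rough feel for how long your sitemap takes to load, here’s a minimal Python sketch. The helper names `time_fetch` and `fetch_sitemap` and the `https://example.com/sitemap.xml` URL are placeholders of mine, not part of any tool mentioned in this article. It simply downloads the file a few times and reports the fastest and slowest run.

```python
import time
import urllib.request
from typing import Callable


def time_fetch(fetch: Callable[[], bytes], runs: int = 3) -> dict:
    """Call fetch() several times and report timings and payload size."""
    timings = []
    size = 0
    for _ in range(runs):
        start = time.perf_counter()
        body = fetch()
        timings.append(time.perf_counter() - start)
        size = len(body)
    return {
        "runs": runs,
        "bytes": size,
        "fastest": min(timings),
        "slowest": max(timings),
    }


def fetch_sitemap(url: str = "https://example.com/sitemap.xml") -> bytes:
    """Download the sitemap once (placeholder URL, swap in your own)."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read()


# Example usage (hits the network, so it is left commented out):
# print(time_fetch(fetch_sitemap))
```

If every run is about equally slow, that may hint that the file is rebuilt on each request, although network conditions can produce the same pattern, so treat the numbers as a prompt to investigate rather than proof.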

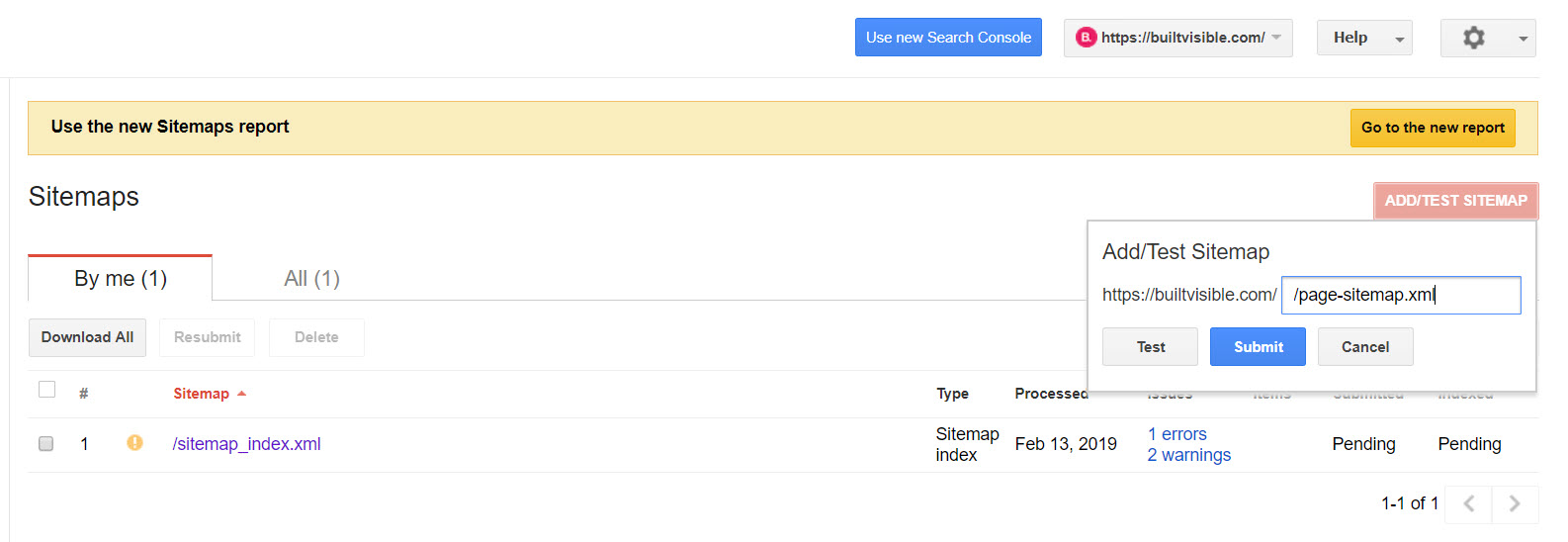

Adding and verifying a new sitemap in the (old) Search Console.

The previous version of Google’s Search Console still offers better functionality than the new version, which is currently extremely limited.

I’ll update this article should the new functionality develop, which I’m quite sure it will.

Configure Screaming Frog to only crawl the URLs found in the XML sitemap

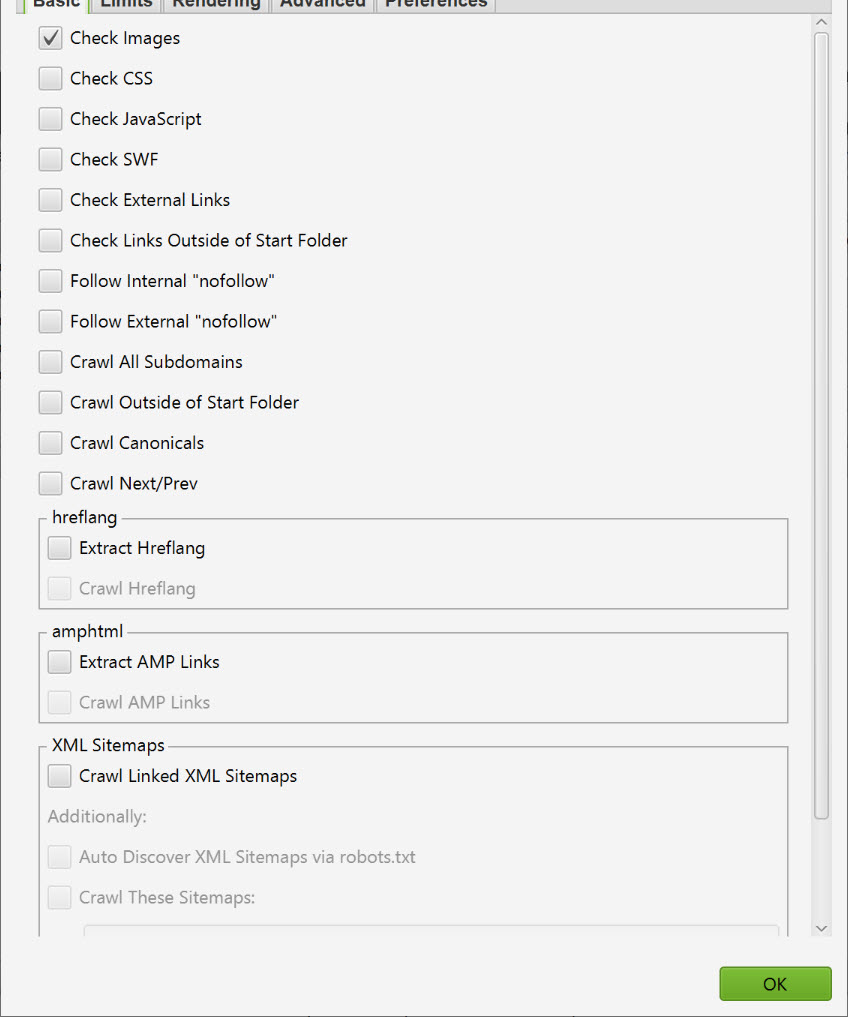

Screaming Frog is a very curious little site crawler. The default configuration, even in list mode, will crawl a lot more resources than you might want. Here’s how to configure the crawler:

Head to Configuration > Spider and deselect everything on the “Basic” tab. This will prevent Frog from discovering new URLs or unnecessarily crawling resources that aren’t useful for this project.

Once the configuration is done, we’re ready. Here’s a summary of the process I’m going to teach you today:

How to check your XML Sitemap for errors with Screaming Frog

- Open Screaming Frog and select “List Mode”

- Grab the URL of your sitemap.xml file

- Head to Upload > Download Sitemap

- Frog will confirm the URLs found in the sitemap file

- Click Start to start crawling

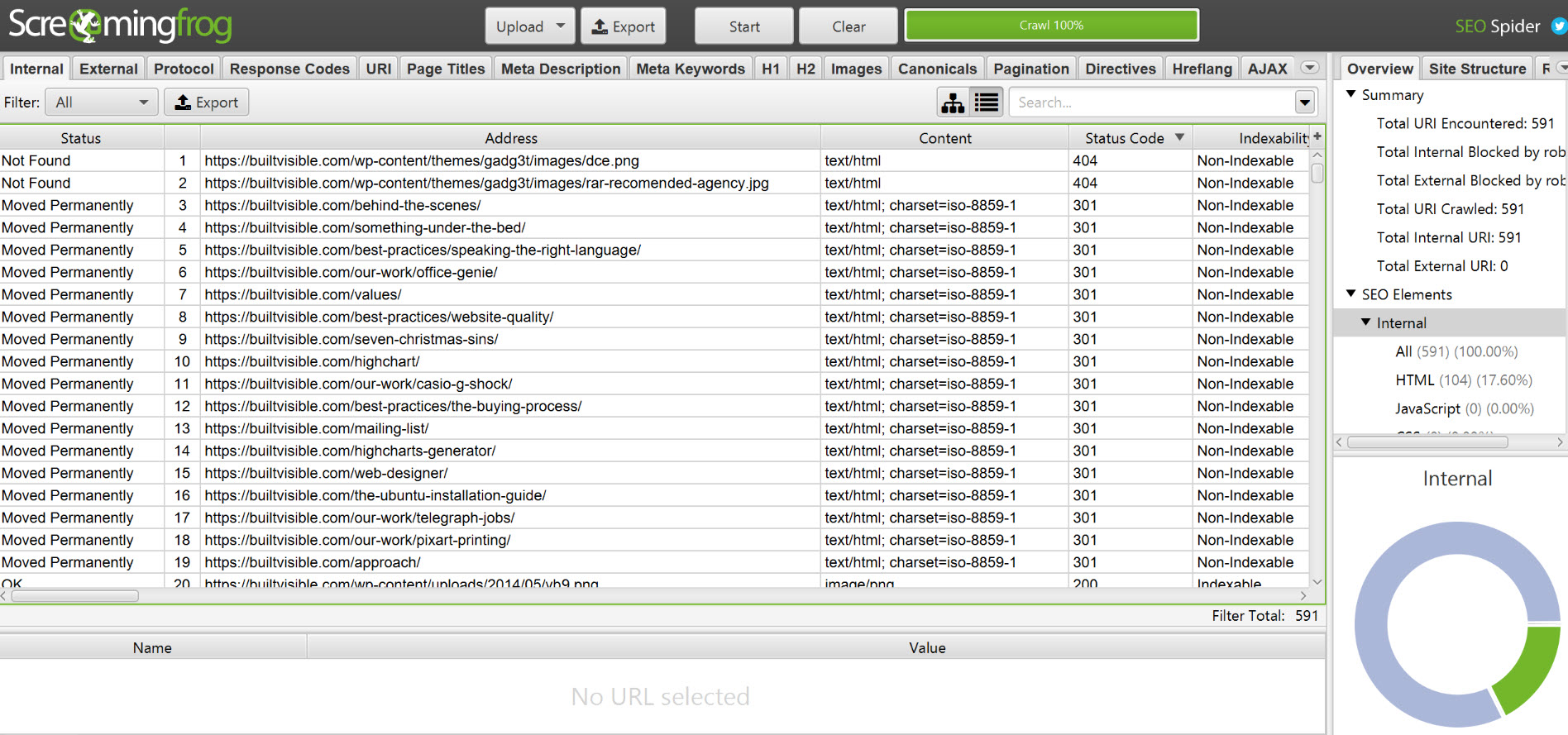

- Export the data to CSV or sort by Status Code to highlight any potential issues

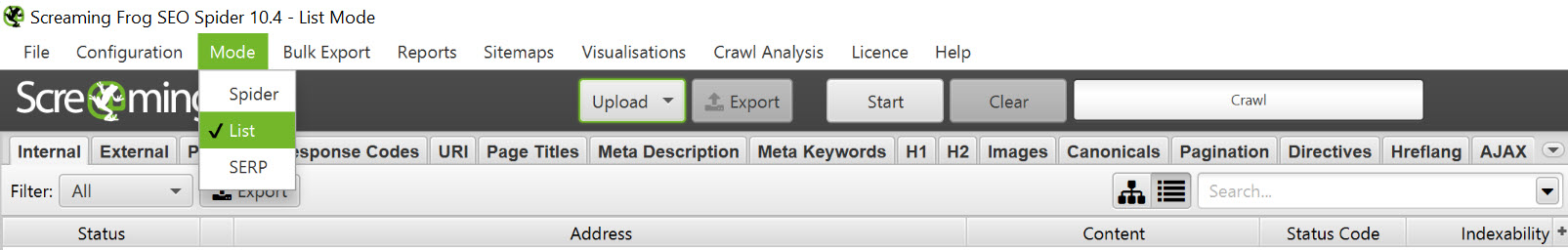
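To make the “URLs found in the sitemap file” step more concrete, here’s a small Python sketch of what reading a sitemap involves. It is not how Screaming Frog works internally; `extract_sitemap_urls` is a hypothetical helper of mine. It relies on the standard sitemap namespace and returns whether the file is a plain URL set or a sitemap index, along with the `<loc>` values it contains.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def extract_sitemap_urls(xml_text: str) -> tuple[str, list[str]]:
    """Return ("urlset" or "index", list of <loc> values)."""
    root = ET.fromstring(xml_text)  # raises ParseError on malformed XML
    if root.tag == SITEMAP_NS + "sitemapindex":
        kind, entry = "index", "sitemap"
    elif root.tag == SITEMAP_NS + "urlset":
        kind, entry = "urlset", "url"
    else:
        raise ValueError(f"Unexpected root element: {root.tag}")

    urls = []
    for node in root.findall(SITEMAP_NS + entry):
        loc = node.find(SITEMAP_NS + "loc")
        # Skip entries with a missing or empty <loc>.
        if loc is not None and loc.text and loc.text.strip():
            urls.append(loc.text.strip())
    return kind, urls


sample = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/about</loc></url>"
    "</urlset>"
)
kind, urls = extract_sitemap_urls(sample)
print(kind, len(urls))
```

A file that fails to parse here, or that returns fewer URLs than you expect, is worth a closer look before you even start the crawl.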

Open Screaming Frog and select “List Mode”

Open Screaming Frog and select “List” via the “Mode” drop-down menu:

Now go and fetch the sitemap.xml URL.

Grab the URL of your sitemap.xml file

First, find your XML sitemap URL. If it’s your website, you’ll already know this. It’s usually found at “/sitemap.xml” or “/sitemap_index.xml”.

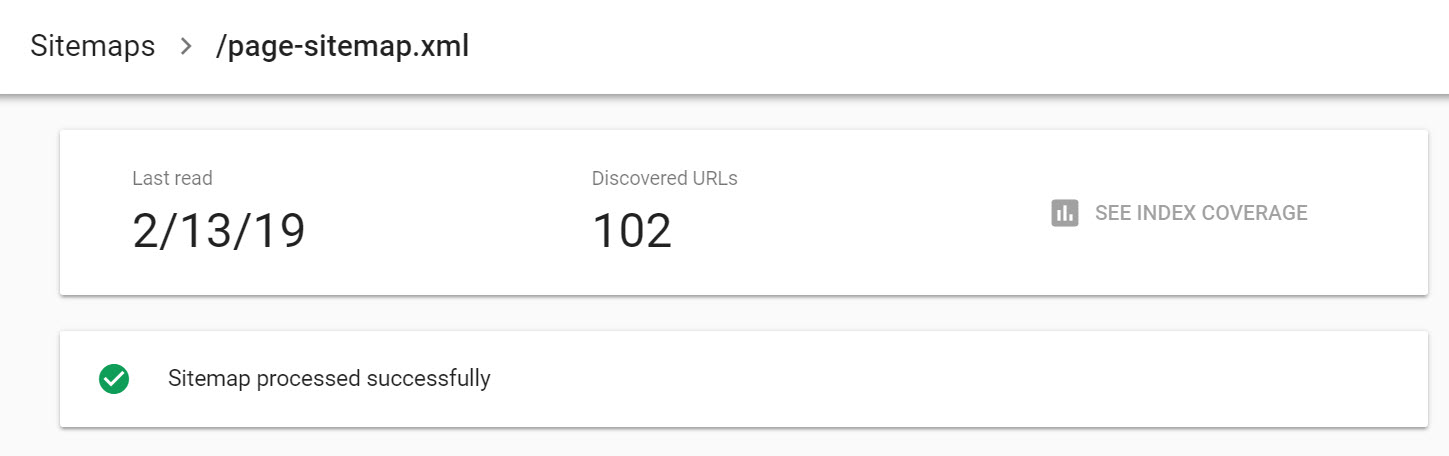

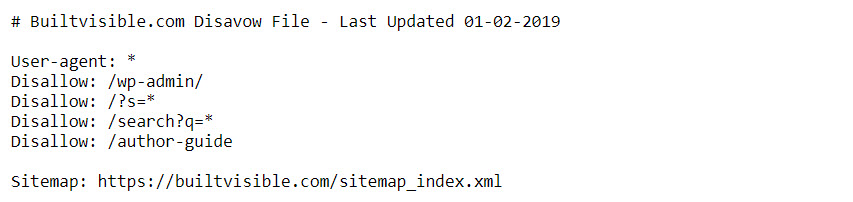

It should also be available in the sitemaps report in Search Console or visible in your robots.txt file:

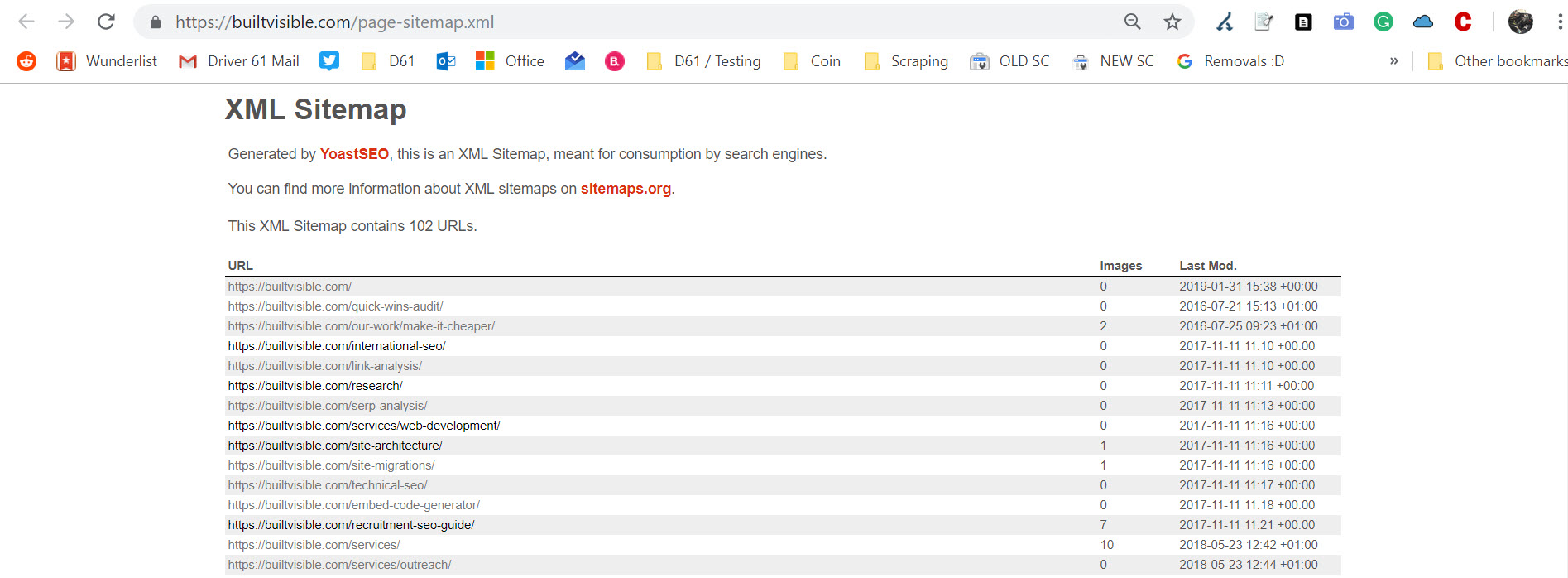
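If it isn’t your own site, the same lookup can be sketched in a few lines of Python. Both helpers below are hypothetical names of mine: `candidate_sitemap_urls` builds the two common locations mentioned above, and `sitemaps_from_robots` pulls any `Sitemap:` lines out of robots.txt content you’ve already downloaded.

```python
from urllib.parse import urljoin


def candidate_sitemap_urls(site: str) -> list[str]:
    """Build the two conventional sitemap locations for a site."""
    return [
        urljoin(site, "/sitemap.xml"),
        urljoin(site, "/sitemap_index.xml"),
    ]


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Collect the URLs declared on 'Sitemap:' lines in robots.txt text."""
    found = []
    for line in robots_txt.splitlines():
        # Drop trailing comments (assumes the sitemap URL has no '#').
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            found.append(value.strip())
    return found


robots = (
    "User-agent: *\n"
    "Disallow: /wp-admin/\n"
    "Sitemap: https://example.com/sitemap_index.xml\n"
)
print(sitemaps_from_robots(robots))
print(candidate_sitemap_urls("https://example.com/blog/"))
```

The robots.txt declaration is the more reliable of the two, since the conventional paths are only a guess.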

You can visit the sitemap URL in your browser. Here’s how Builtvisible’s sitemap.xml file looks, generated by the Yoast SEO plugin:

Copy the URL and head back to Screaming Frog.

Head to Upload > Download Sitemap

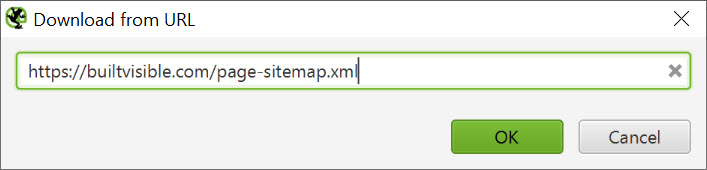

Head to Upload > Download Sitemap and paste the URL in the dialogue window:

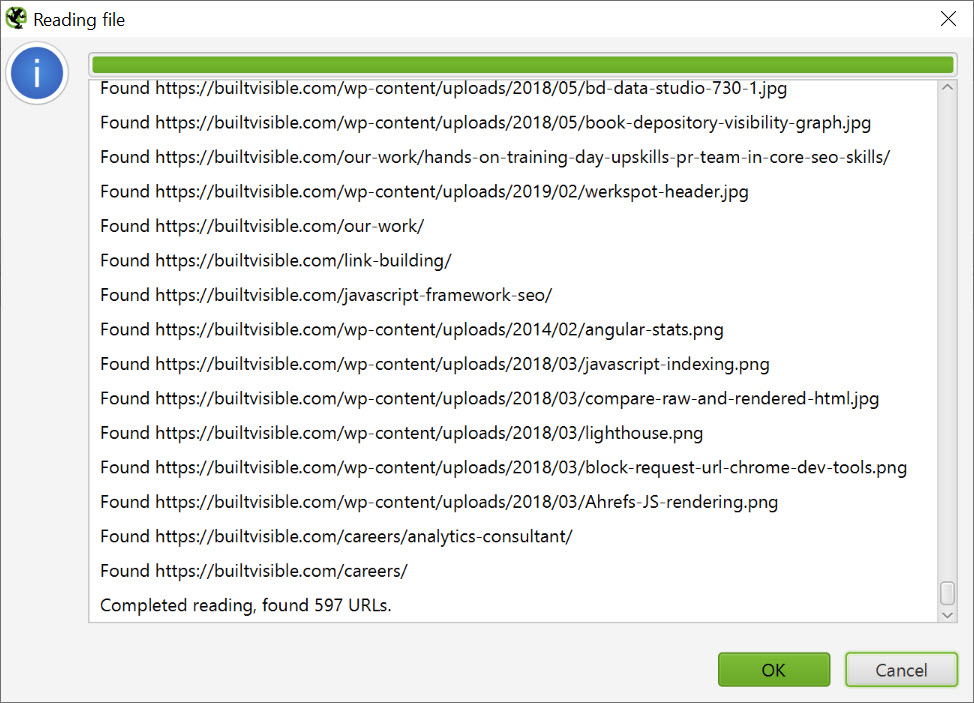

Frog will confirm the URLs found in the sitemap file via the next dialogue window:

Click “OK” and the crawler will automatically run.

Once the crawl is complete, you can export the data to CSV or sort by Status Code to highlight any potential issues. You don’t want 301 redirects or 404 errors – go ahead and resolve those problems!
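If you export to CSV, a short script can do the sorting for you. This is a sketch only: I’m assuming the export has “Address” and “Status Code” columns, so check the header row of your own file and adjust the column names if they differ. `flag_problem_rows` is a hypothetical helper that returns every URL whose status is anything other than 200.

```python
import csv
import io


def flag_problem_rows(
    csv_text: str,
    address_col: str = "Address",      # assumed column name
    status_col: str = "Status Code",   # assumed column name
) -> list[tuple[str, str]]:
    """Return (url, status) pairs for rows that did not answer 200."""
    problems = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        status = (row.get(status_col) or "").strip()
        if status != "200":
            problems.append((row.get(address_col) or "", status))
    return problems


export = (
    "Address,Status Code\n"
    "https://example.com/,200\n"
    "https://example.com/old-page,301\n"
    "https://example.com/missing,404\n"
)
for url, status in flag_problem_rows(export):
    print(status, url)
```

Anything this prints is a URL that arguably shouldn’t be in the sitemap in its current form: either update the entry to the final destination or remove it.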