So, what are the different classes of user intent?

Google relies on people, known as search quality raters, who run a series of searches and then report back on whether the results make sense. They follow the Search Quality Rater Guidelines, which focus heavily on user intent and classify queries into four types: Know, Do, Website, and Visit-in-person.

In SEO, however, intent classes are defined by us, the industry. Many organisations take their own approach and define intents based on their expertise and specialisation. Nonetheless, four classes are commonly quoted and repeated in most articles, blogs, and tools:

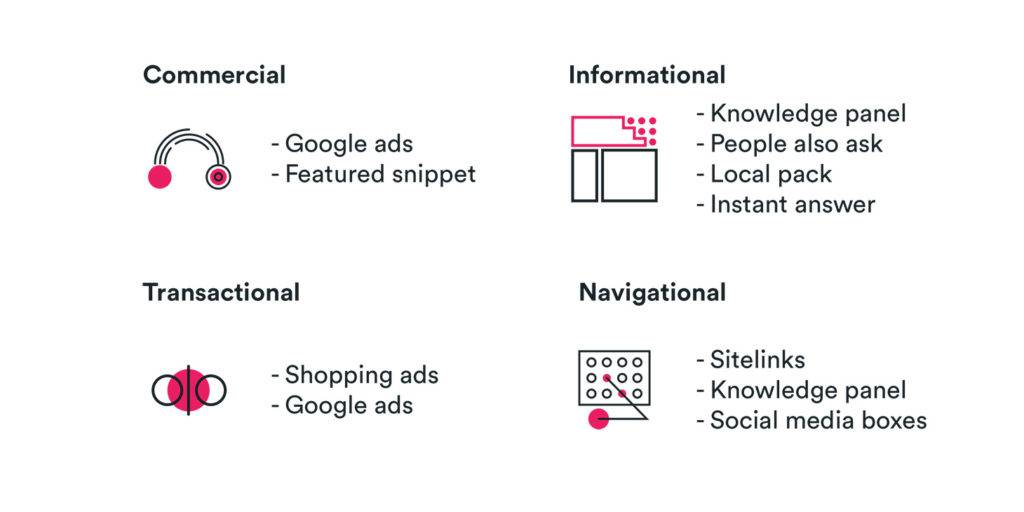

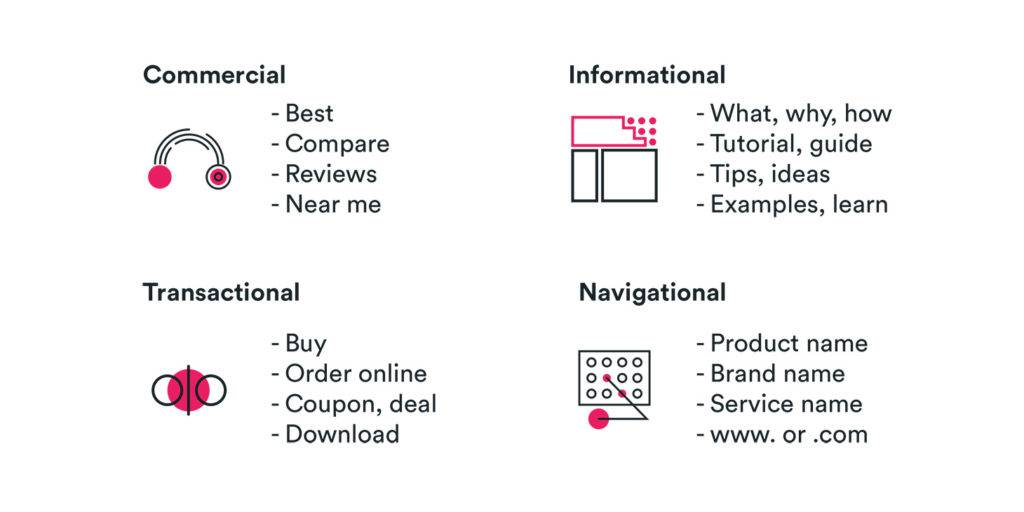

- Navigational intent: Searching to reach a specific website or page (e.g., “Tesla website”)

- Informational intent: Searching to learn something (e.g., “Who is the CEO of Tesla?”)

- Transactional intent: Searching to complete a purchase (e.g., “buy Tesla model 3”)

- Commercial intent: Searching to research options before making a purchase decision (e.g., “Best electric cars in 2022”)

The challenge with existing providers

Several providers can report search intent; Searchmetrics, SEMrush, and Moz are some of them. However, each of them offers intent recognition as a premium service billed per API call. Given the query volume of our existing clients and the growth we are experiencing, we concluded that this option was no longer suitable: the cost would simply outstrip the value generated. So, with innovation at our core, we set out to build our own intent model from scratch!

The first problem we faced was finding an appropriate dataset. We needed at least 100k high-volume queries, each labelled with an intent. Unfortunately, no such dataset is available, either for free or under licence, so we had to create our own. Finding 100k top queries was the easy part: there are a few large datasets containing millions of search engine queries, the best known being Yahoo Webscope, Yandex Datasets, the AOL Query Logs, and the MSN Query Logs.
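Reducing a raw query log to its most frequent queries is essentially a counting job. The sketch below assumes each log entry has already been parsed down to a plain query string (real dumps such as the AOL logs come in their own tab-separated formats), and the `top_queries` helper and its normalisation rule are illustrative choices rather than the original pipeline.

```python
from collections import Counter


def top_queries(log_lines, k):
    """Return the k most frequent queries from an iterable of raw query strings.

    Queries are lower-cased and their whitespace collapsed, so trivial variants
    such as "Tesla  Website" and "tesla website" are counted together.
    """
    counts = Counter(
        " ".join(line.lower().split()) for line in log_lines if line.strip()
    )
    return [query for query, _ in counts.most_common(k)]


# Toy log standing in for a real query dump.
log = [
    "Tesla website",
    "tesla  website",
    "buy tesla model 3",
    "who is the ceo of tesla",
    "Tesla Website",
    "buy tesla model 3",
]
print(top_queries(log, 2))  # ['tesla website', 'buy tesla model 3']
```

On a real log, `k` would simply be set to 100,000.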

The hard part was labelling each of the 100k unique queries. In an ideal world with infinite resources, we would employ an army of ‘labellers’ who, much like Google’s quality raters, would search each term one by one, look at the SERP, and assign one of the pre-defined labels. Obviously, this approach is unviable for all but the largest tech companies. So, what did we do?

We requested intent labels for all 100k queries from five large SEO services via their APIs. We then took the mode, the most common intent across the five providers, and assigned it as the correct label. The biggest constraint of this approach is that the label set itself was defined by the providers, so we couldn’t introduce custom intents such as ‘local’. We were limited to the four classes mentioned before: Navigational, Informational, Commercial, and Transactional. Nonetheless, this approach was successful, cost-effective, and relatively easy to implement.
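Taking the mode of the five provider labels is a plain majority vote. The sketch below is a minimal version of that step; the `consensus_label` name, the lower-casing of labels, and the decision to return `None` on a tie (rather than picking one arbitrarily) are assumptions, since the tie-breaking policy isn't described above.

```python
from collections import Counter

INTENTS = {"navigational", "informational", "commercial", "transactional"}


def consensus_label(provider_labels):
    """Return the mode of the providers' intent labels for one query.

    Labels are normalised to lower case and anything outside the four shared
    classes is ignored. Returns None when no label is valid or when two
    intents tie for first place (an assumed tie-breaking policy), so
    ambiguous queries can be set aside instead of labelled arbitrarily.
    """
    votes = [label.strip().lower() for label in provider_labels]
    counts = Counter(v for v in votes if v in INTENTS)
    if not counts:
        return None
    ranked = counts.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: there is no single mode
    return ranked[0][0]


# One query, five providers (toy labels).
print(consensus_label(["Informational", "informational", "commercial",
                       "informational", "transactional"]))  # informational
print(consensus_label(["commercial", "commercial", "transactional",
                       "transactional", "informational"]))  # None
```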

The next step was to collect the SERP features for each query. These features are critical for understanding the intent Google assumes behind a query, and they are the cornerstone of our model. Many providers can extract SERP features for any query, at a cost; Ahrefs, SEMrush, and SerpWow are some examples. With another round of API calls we extracted the complete set of SERP features for our dataset. In total, across all 100k queries, we found 143 unique features!
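A common way to turn a variable set of SERP features into model input is a fixed-length multi-hot vector: one position per distinct feature, set to 1 when that feature appears on the query’s SERP. The sketch assumes each query’s features arrive as a list of names; the feature names below are made up for illustration, and on the full dataset the vocabulary would have 143 entries.

```python
def build_vocabulary(features_by_query):
    """Collect every distinct SERP feature across the dataset, in a stable order."""
    return sorted({f for features in features_by_query.values() for f in features})


def multi_hot(features, vocabulary):
    """Encode one query's SERP features as a 0/1 vector aligned with the vocabulary."""
    present = set(features)
    return [1 if name in present else 0 for name in vocabulary]


# Hypothetical feature names, not any provider's actual schema.
serp = {
    "who is the ceo of tesla": ["knowledge_panel", "people_also_ask"],
    "buy tesla model 3": ["shopping_ads", "local_pack"],
    "best electric cars 2022": ["featured_snippet", "people_also_ask"],
}
vocab = build_vocabulary(serp)
print(vocab)
print(multi_hot(serp["buy tesla model 3"], vocab))  # [0, 0, 1, 0, 1]
```

Sorting the vocabulary keeps each feature at the same position across runs, which matters once a model has been trained on these vectors.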

Our Solution

We now had our complete dataset: 100k unique queries, each with its SERP features extracted and an intent label assigned. Now what?

Well, we started with the most important skill in a data scientist’s arsenal: data exploration. Almost immediately we found that the intent classes were heavily imbalanced: 70% of all queries were informational, while only 4% were transactional. Class imbalance like this is a big problem, because most machine learning models trained on such data learn to favour the majority class and perform poorly on the minority ones. I won’t bother you with the specifics, but by applying a combination of oversampling and undersampling methods we balanced the intents and were able to proceed.
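As an illustration of what combining the two methods can look like, the sketch below uses the simplest variants: random undersampling of classes above a target size and random oversampling (with replacement) of classes below it. The specific methods used for BVIntent aren’t stated, so treat `rebalance`, the target of 25, and the invented commercial/navigational shares as assumptions.

```python
import random
from collections import Counter, defaultdict


def rebalance(samples, labels, target_per_class, seed=0):
    """Resample so every class ends up with exactly target_per_class examples.

    Classes larger than the target are randomly undersampled (without
    replacement); smaller classes keep all their examples and are topped up
    by random oversampling (with replacement).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)

    out_x, out_y = [], []
    for y, xs in by_class.items():
        if len(xs) >= target_per_class:
            chosen = rng.sample(xs, target_per_class)
        else:
            chosen = xs + rng.choices(xs, k=target_per_class - len(xs))
        out_x.extend(chosen)
        out_y.extend([y] * target_per_class)
    return out_x, out_y


# Toy split: the 70% / 4% shares mirror the text, the other two are invented.
sizes = {"informational": 70, "commercial": 14, "navigational": 12, "transactional": 4}
labels = [intent for intent, n in sizes.items() for _ in range(n)]
samples = [f"query_{i}" for i in range(len(labels))]

xs, ys = rebalance(samples, labels, target_per_class=25)
print(Counter(ys))  # every intent now has 25 examples
```

Resampling like this should only be applied to the training split, so that evaluation still reflects the real class distribution. Synthetic approaches such as SMOTE are a common alternative to plain duplication for the minority classes.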

With the classes balanced, we designed a multi-layer deep neural network that takes SERP features as input and predicts intent labels as output. Unfortunately, this model plateaued at 85% accuracy, which was not satisfactory by any means. We needed an ensemble of models, and for that we needed the help of NLP.

The first step was to fine-tune an existing NLP model for our purpose: intent recognition. Thankfully, several pre-trained NLP models are available on Hugging Face. After selecting the best fit and fine-tuning it on our dataset, the NLP model identified intent with 76% accuracy.

These two models alone were still not enough to form a sufficiently accurate ensemble, so we built several more: some based on SERP features, others on NLP, each using a different sampling technique or pre-trained NLP model. Finally, by taking a weighted average of the outputs of all the models, we created an ensemble that showed incredibly exciting results.
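A weighted average of model outputs can be sketched as follows, assuming each model returns a probability for every intent. The two example models and the weights 0.6/0.4 are hypothetical; how the ensemble weights were actually chosen isn’t described above, and tuning them on a held-out validation set is one common option.

```python
INTENTS = ("navigational", "informational", "commercial", "transactional")


def weighted_ensemble(model_probs, weights):
    """Weighted average of per-model class probabilities.

    model_probs: one dict per model, mapping intent -> probability.
    weights: one non-negative weight per model.
    Weights are normalised by their sum, so if every model's probabilities
    sum to 1, the combined distribution does too.
    """
    if not model_probs or len(model_probs) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {
        intent: sum(w * probs.get(intent, 0.0)
                    for w, probs in zip(weights, model_probs)) / total
        for intent in INTENTS
    }


# Hypothetical outputs for one query from a SERP-feature model and an NLP model.
serp_model = {"navigational": 0.1, "informational": 0.6,
              "commercial": 0.2, "transactional": 0.1}
nlp_model = {"navigational": 0.0, "informational": 0.3,
             "commercial": 0.6, "transactional": 0.1}

combined = weighted_ensemble([serp_model, nlp_model], weights=[0.6, 0.4])
print(max(combined, key=combined.get))  # informational
```

Here the two models disagree (informational vs commercial), and the weighting decides the outcome: informational ends up at 0.48 and commercial at 0.36.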

The outcome: BVIntent

The final model, aptly named BVIntent, performs better than any single SEO provider. Not only are the recognised intents more accurate, but because we control the entire process, we can also flag queries for which the model is not confident enough (less than 50% confidence in the top intent) and queries that are multi-intent (more than 35% confidence in the second most likely intent).
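The two flags reduce to threshold checks on the ranked intent probabilities. The thresholds below come from the text; the `assess` function, its output fields, and the example probabilities are hypothetical. Note that one query can trip both flags at once.

```python
LOW_CONFIDENCE = 0.50  # top intent below this -> prediction flagged as uncertain
MULTI_INTENT = 0.35    # second intent above this -> query flagged as multi-intent


def assess(probs):
    """Return the top intent plus low-confidence and multi-intent flags for one query."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    (top, top_p), (second, second_p) = ranked[0], ranked[1]
    multi = second_p > MULTI_INTENT
    return {
        "intent": top,
        "low_confidence": top_p < LOW_CONFIDENCE,
        "multi_intent": multi,
        "second_intent": second if multi else None,
    }


clear = {"navigational": 0.05, "informational": 0.85,
         "commercial": 0.07, "transactional": 0.03}
mixed = {"navigational": 0.06, "informational": 0.48,
         "commercial": 0.36, "transactional": 0.10}

print(assess(clear))  # confident, single intent
print(assess(mixed))  # flagged: low confidence and multi-intent
```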

BVIntent allows us, amongst other benefits, to create an intent map for our clients. By looking at client search queries over time, we can uncover the most important, the fastest-growing, and the highest-revenue intents for their users. Knowing intent at such a deep level allows functional teams, from PR to link-building, to optimise SEO actions, build a better SEO strategy, and ultimately deliver more value for the brand.
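An intent map of this kind can be sketched as a simple aggregation, assuming the predicted intents have already been joined with traffic and revenue data into `(month, intent, clicks, revenue)` rows. The row layout, figures, and helper names are hypothetical, and “most important” is interpreted here as highest total click volume.

```python
from collections import defaultdict


def build_intent_map(rows):
    """Aggregate (month, intent, clicks, revenue) rows per intent.

    Returns monthly clicks per intent and total revenue per intent.
    """
    clicks = defaultdict(lambda: defaultdict(int))
    revenue = defaultdict(float)
    for month, intent, n_clicks, rev in rows:
        clicks[intent][month] += n_clicks
        revenue[intent] += rev
    return clicks, revenue


def growth(monthly_clicks, first, last):
    """Relative change in clicks between two months (None if the first is zero)."""
    start = monthly_clicks.get(first, 0)
    end = monthly_clicks.get(last, 0)
    return None if start == 0 else (end - start) / start


rows = [
    ("2022-01", "informational", 1000, 50.0),
    ("2022-01", "transactional", 100, 400.0),
    ("2022-02", "informational", 1100, 55.0),
    ("2022-02", "transactional", 150, 600.0),
]
clicks, revenue = build_intent_map(rows)

top_volume = max(clicks, key=lambda i: sum(clicks[i].values()))
rates = {i: growth(m, "2022-01", "2022-02") for i, m in clicks.items()}
fastest = max((i for i in rates if rates[i] is not None), key=rates.get)
top_revenue = max(revenue, key=revenue.get)
print(top_volume, fastest, top_revenue)  # informational transactional transactional
```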

BVIntent is used across internal teams to test new content, optimise meta tags, prioritise link-building partners, and more. It has supercharged our SEO capabilities. Not only can we identify intent, we can also predict future behaviour for a cohort of users and uncover signals that point to an increase in ‘transactional’ traffic. We can even quantify the value of your brand by looking at how many users reach you directly (‘navigational’ intent), with a clarity that goes beyond simply looking at brand terms.

In conclusion, BVIntent delivers pinpoint-accurate intent data to our experts, who use it to make better, data-driven decisions in their SEO work.

Curious to learn about the intent of your users? Ever wondered ‘why’ people come to your site? Are they here to learn? To buy? To compare? How has your user intent changed over time? And how can you predict it and plan new content accordingly?

If you are curious and want answers to these questions and more, just drop us a line. Together we can build a world-class SEO strategy that not only uses established best practices but is also empowered by state-of-the-art intent analysis.