A brief history of NLP

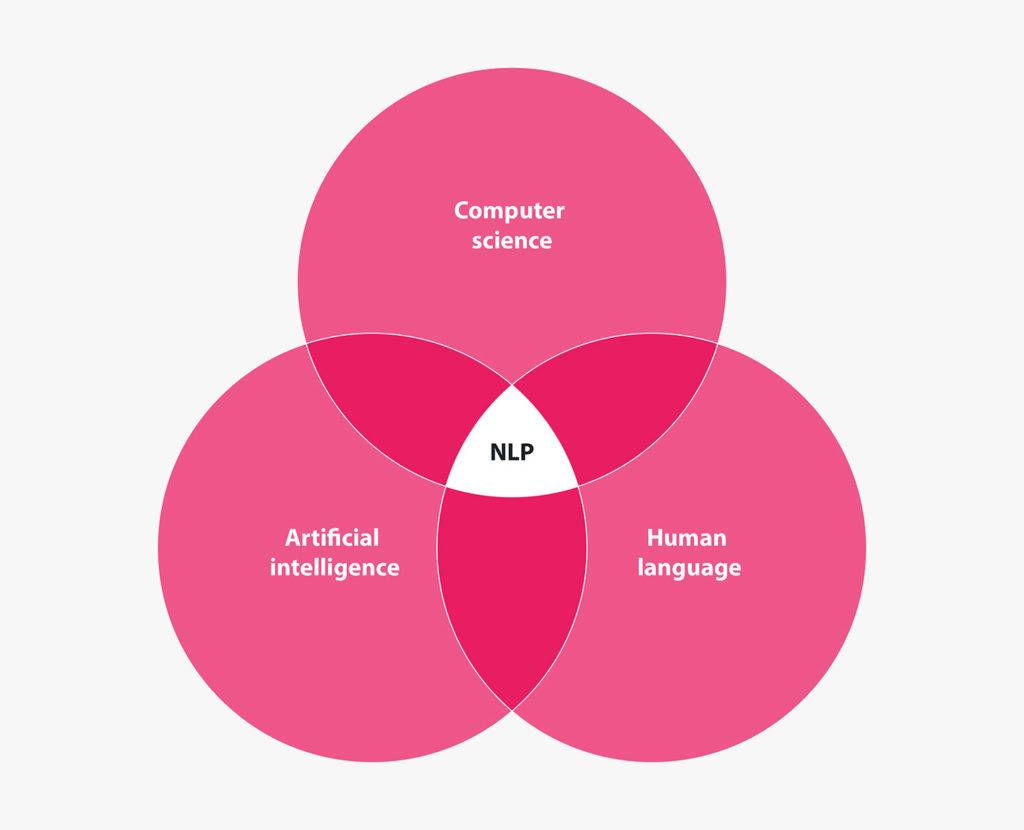

Natural Language Processing (NLP) has been around since the end of World War II, some 75 years ago. Back then, with the advent of basic computing machines, researchers across the world started playing with the idea of a machine that could translate language automatically. The field of NLP was born.

Back then the best supercomputers had less processing power than a modern e-scooter. It is easy to understand why no big breakthroughs happened in this era. Many researchers thought NLP was a dead end and left to pursue other interests.

Fast forward to the 2010s, quickly skipping all side content until we reach the crown jewel that allowed modern NLP to be the powerhouse it is today. Somewhere in this decade deep learning (DL) broke through as a new approach in machine learning and took the world by storm. The reason? Well… DL has a unique capacity to create inferences and find relations across any form of data, be it images, video, text, or else.

Specifically for text, we process language while keeping memories that are related to the activity at hand. For example, as you read through this blog, you are processing it word by word while keeping memories of what you have read in earlier sections that help you understand and stay in context.

Modern NLP models go around this memory problem by applying a specific type of DL technology known as a Recurrent Neural Network (RNN). Without going into all the complexities, an RNN is a special type of network specially designed to incorporate dependencies in data.

Just like the humans they’re working for, these networks go through sequences of data and maintain a memory state relative to what it has seen before. This memory trait in RNNs allows the model to adjust for context, but it is still missing something — a sort of magical, cognition pizzazz that brings it all together, in NLP we call that attention.

Without an attention mechanism that highlights important information and discards the rest, NLP models would have to remember every sentence ever written to understand context. Models without attention are inefficient because they ‘read’ linearly, keeping in memory the full sequence of text. They are too resource demanding, even for modern computers.

The attention mechanism in NLPs introduces a series of hidden steps that record and memorise context by segment. It can identify, prioritise, and memorise ‘high value’ segments that give meaning to the whole text, while discarding the rest. With attention modern models can now understand tone, intention, meaning, and more, they can even understand (and dish out) sarcasm.

State of the art models

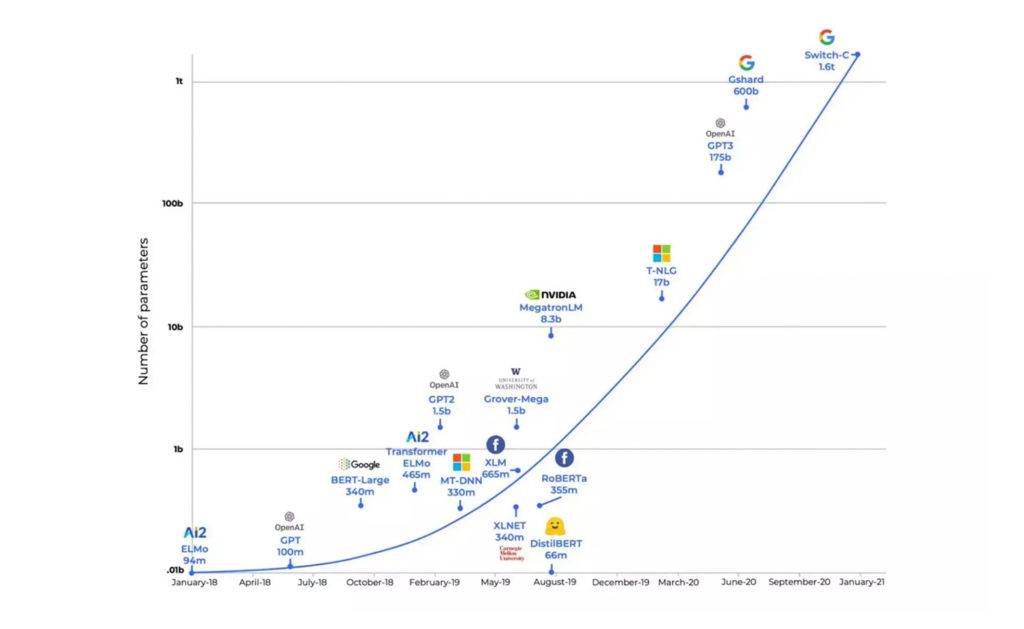

The first fully fledged attentive NLP model is known as BERT (Bidirectional Encoder Representations from Transformers). BERT was created and published in 2018 by Jacob Devlin and his colleagues from Google. In 2019, Google announced that it had begun using BERT in its search engine, and by late 2020 it was using it in almost every global query.

BERT was trained on 110 million parameters. NLP models with large numbers of parameters (values to optimise) acquire a richer, more nuanced understanding of language. Google later updated the model to BERTLarge, trained on 340 million parameters. BERTLarge is to this day the model used in Google search (but I suspect that Google will move on soon, more on this later).

Two years later, in 2020, OpenAI released its third version of Generative Pre-trained Transformer, known as GPT-3. This model was trained on a mind-boggling 175 billion (yeah, with a B!) parameters. It would take 355 years to train GPT-3 on a single home computer. In an initial experiment, 80 people were asked to judge if short articles (200 words) were written by humans or GPT-3. The participants judged incorrectly 48% of the time, only a bit better than random guessing. Scary.

Just last year in 2021 Microsoft announced the Megatron-Turing NLG and Google did the same for their Switch-C model. These models are absurdly large, Microsoft’s has 530 billion parameters while Google’s has 1.6 trillion. I suspect that sometime in the near future Google will start migrating its search queries from BERT to Switch-C (or PaLM, a 540bn model that is much faster at similar performance level released in 2022).

Looking at the exponential growth in NLP models it is not crazy to speculate that in the near future some tech giant will announce a 60 trillion parameter model. A seemingly random number I picked because it is the estimated number of parameters in the human brain — theoretically, a model of this scale could interpret text to the same standard as the best human reader.

NLP in SEO

So why should SEOs care? Modern NLP models are so powerful they can solve a wide array of language problems. Question answering, code completion, text summarization, language translation, reading comprehension, and sentiment analysis are some of the most common applications.

Specifically for SEO I want to expand on the two main applications we use at Builtvisible – there are several others but more on that in part 2…

The first is known as Zero-Shot Classification, the second one is simply known as Text Generation (you may often see this referenced as NLG or Natural Language Generation).

Zero-Shot

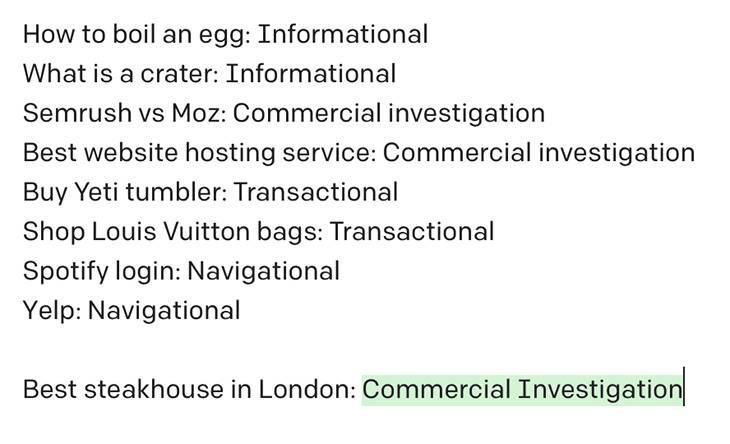

Satisfying search intent is the primary goal for Google, which in turn makes it a primary goal for SEOs. Putting it simply, if you want to rank high in Google, you have to offer content that best fits the users’ search intent. While there are endless search terms, there are just four primary search intents:

- Informational: When users are looking to gain knowledge. It could be in the form of a how-to guide, a recipe, an interesting statistic, or a definition.

- Commercial Investigation: When users are looking for a product but are not quite ready to make a purchase. They are looking at comparisons, reviews, or unique features.

- Transactional: When users have a clear product in mind, they have a good idea of what they’re looking for. Since the user is already in buying mode, these terms are usually branded.

- Navigational: When users are looking for a specific website. They already know where they want to go. They just do not know how to get there.

Zero-shot classification models allow us to find the intent behind any piece of text, from the algorithm’s perspective – whether it is finding the general intent from a whole blog post (like this one), the intent of a single keyword, or the high value keywords from a whole piece of content.

We use this tool daily to optimise content and understand what Google search ‘reads’ from our writing. For example, and as a performance marketting agency this happens to us quite a bit, it could be that an informational piece also has a strong commercial investigation intent. If Google directs users to this content when they are looking to compare products (the wrong intent); they will leave quickly, increasing bounce rates and dropping our precious ranking.

Key Takeaway

Zero-shot models do intent recognition like no other. They help SEOs to understand, optimise, and match content to the perspective of Google’s NLP search algorithm.

Text Generation

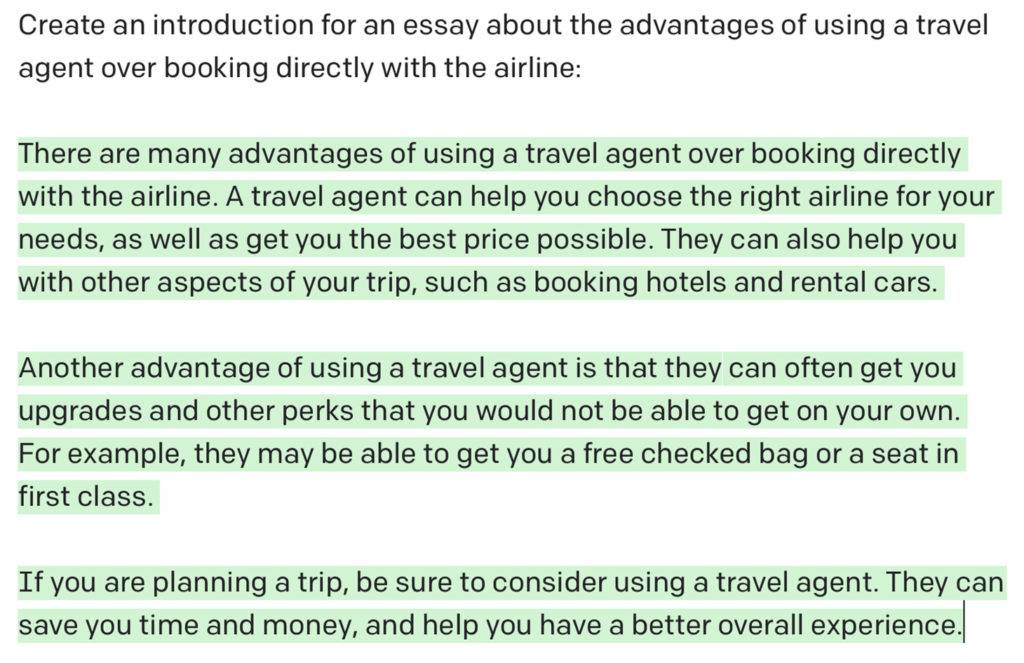

This one almost speaks for itself. Models trained in text gen empower our SEO content teams daily. Text gen has more than a few pitfalls (which will be expanded upon in the follow-up post) and as such it is not the ultimate solution. As my colleague Emily says, It cannot replace human content creation. Instead, we use NLP text gen as a clever tool in unique workflows where we collaboratively work with machines to create valuable and optimal content. She’s also evaluated some of the current NLG tools on the market in her post here.

The unique strength of text gen models is not that they are better than humans at writing, but that they are in fact, fast, cheap, and empower our already excellent copy team to reach new heights. So, specifically, where do we use text gen at Builtvisible?

- FAQ generation: Use NLP to find the most relevant questions for a given piece of content and then pass these to humans who write the answers.

- Product description: Use NLP to generate descriptions for products or categories of products before human proofreading.

- Intro draft: Use NLP to generate an intro for a new piece of content based on similar pieces using selected keywords.

- Rewriting: Use NLP to rewrite content into more appealing versions. For example, rewriting dry bullet points into engaging text that helps potential customers better understand a product.

Key Takeaway

Text Generation models alone are unfit for SEO. Yet, in the hands of a great content team supported by NLP experts they can become a strong tool that empowers a wide range of workflows.

As you can see, the field of NLP is incredibly complex but, as a core component of modern search algorithms, it sits at the very heart of good SEO. A quality NLP model in the hands of an expert consultant, can massively help you navigate this complex arena.

The work we do with NLP at Builtvisible is rather unique in the SEO industry; we blend and match machines with humans to better signpost both content and intent. This is only possible because of our expertise across technical SEO, content, and data.

If this sparks your interest, let’s have a conversation. Otherwise sit tight and stay tuned, in the coming months we’ll look a little closer at each of these use cases for NLP.