Downloading and installing Anaconda

Download and install the latest Anaconda package for your operating system. It installs most of what you'll need, including:

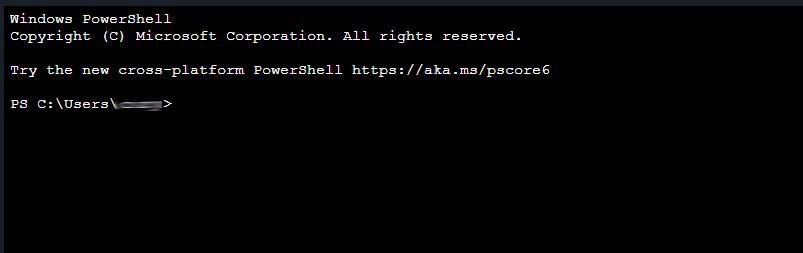

- A command prompt for executing your script and installing needed libraries (Anaconda Prompt). We'll use the built-in PowerShell command prompt in this tutorial to make it even simpler.

- Jupyter Notebook, which will make creating this script even easier.

- Python 3.x (latest version).

During the installation process, follow the installation guide. You don’t need to change any of the preselected values unless you know what they are and want to customise them.

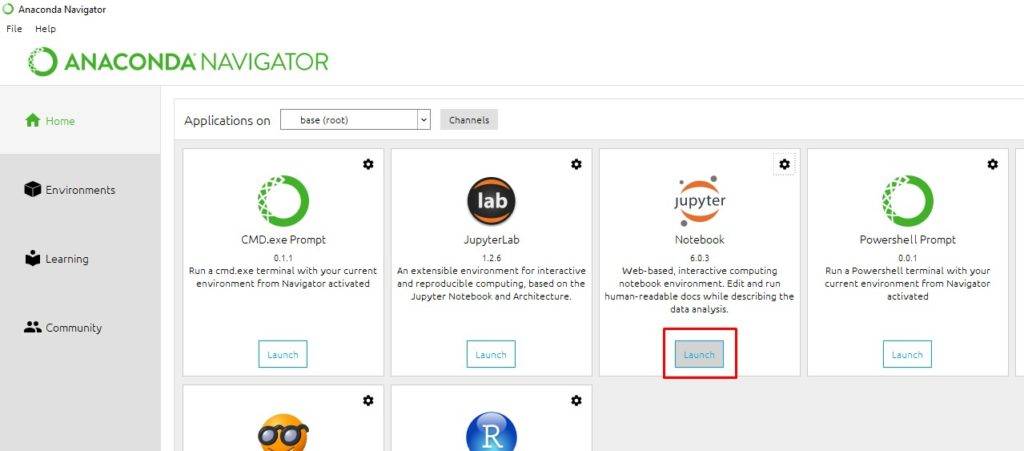

Go ahead and find “Anaconda Navigator” in your start menu. When opened, you’ll see all the software installed with the package. Let’s open the one we’ll be using – Jupyter Notebook.

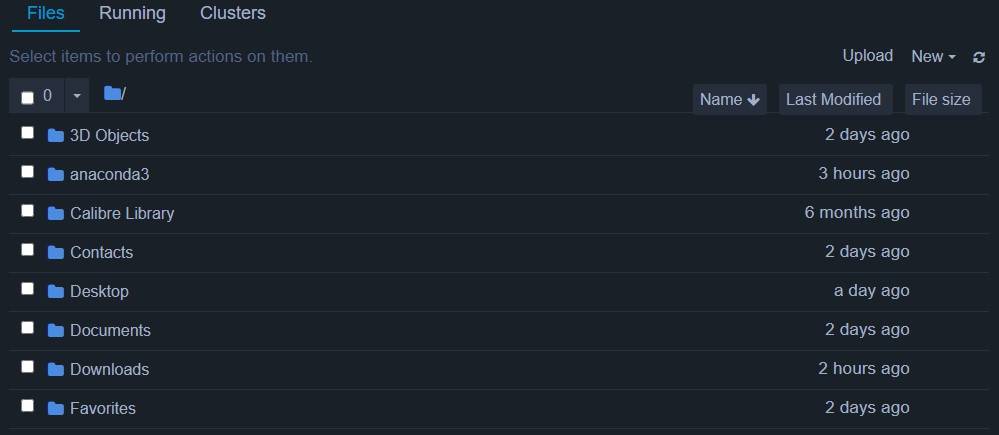

The Jupyter interface will then open in your browser.

Note: if you already have Anaconda installed on your machine, be sure to update it to the latest version first.

Preparing the environment

First, we’ll need to install a couple of libraries we’ll use in the script we’re about to write. Don’t worry, this process will be quite simple, and you don’t need to know how they work. Just enter (or copy/paste) the commands below:

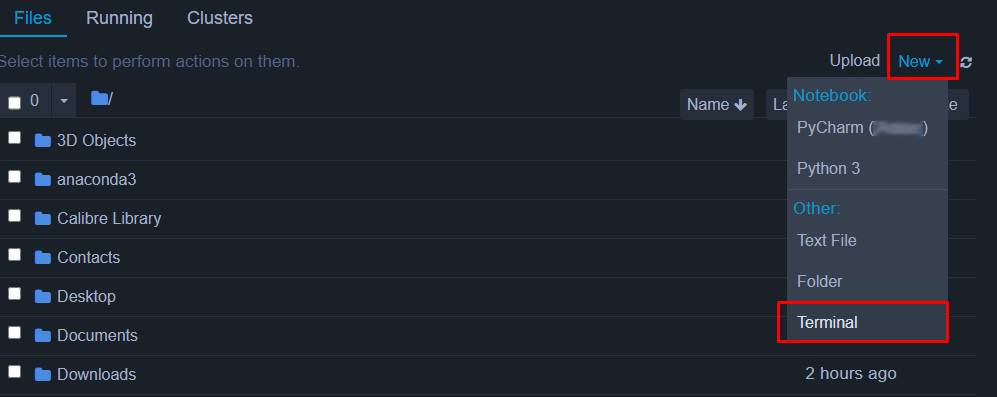

1. In the top right-hand corner, click “New” and then “Terminal”.

2. The terminal window will open in your browser.

3. To check whether Python was installed successfully, type the following and hit “Enter”:

```
python --version
```

4. If you get an error or nothing is printed, Python isn't installed; try uninstalling and reinstalling Anaconda. Otherwise, you'll see something like this:

```
$ python --version
Python 3.7.0
```

5. Let's install the first package, called “pandas”. In the terminal, type (or paste):

```
conda install pandas
```

6. Then hit “Enter”. When asked whether you want to proceed, type “y” and hit “Enter” again; it should take only a few seconds to finish.

Note: if the command doesn't work, you can try this instead:

```
pip install pandas
```

To access the Google Search Console API, I'm using this brilliant wrapper created by Josh Carty and available on GitHub.

7. To install packages from GitHub, we'll first need Git on our machine, so go ahead and type this in your Terminal:

```
conda install git
```

8. To install the wrapper from GitHub, type this in your Terminal:

```
pip install git+https://github.com/joshcarty/google-searchconsole
```

This is all we'll need to install for this project.
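Before moving on, you can optionally confirm from Python that both libraries are importable. The sketch below is a hypothetical helper (`missing_modules` is my own name, not part of either library) built on the standard library's `importlib.util.find_spec`. Note that the GitHub wrapper is imported as `searchconsole`, not by its repository name.

```python
import importlib.util

# Module names used later in this tutorial.
REQUIRED_MODULES = ["pandas", "searchconsole"]


def missing_modules(names):
    """Return the subset of `names` that Python cannot find to import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Run it in a notebook cell or save it as a .py file and run it from the Terminal; anything it lists still needs installing.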

Getting access to GSC API

To access the Google Search Console API (GSC API), you'll need to create credentials in the Google Developer Console. As it's quite a detailed process, I couldn't do a better job than the awesome SEO Jean-Christophe Chouinard, so please follow the instructions in his guide.

Important: the Developer Console user interface changes over time, so navigation elements may have moved; however, it's essential that you follow the steps in the order they are presented in the guide linked above. This is the step most likely to trip you up in the future. If you cannot find “Other” as the application type, use “Desktop” instead; it works too.

Important: make sure you're logged in to the Google Developer Console with the same Google account you'll use to access Google Search Console.

When done, download the “client secrets” file as JSON from the Google Developer Console. It's also a good idea to keep a backup copy of this file somewhere safe on your hard drive, as you can reuse it for all your future work with the GSC API.
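If you want a quick sanity check that you downloaded the right file, the sketch below inspects its JSON. It assumes Google's usual layout, where the client details sit under an `installed` key for desktop (“Other”) clients or a `web` key for web clients; `check_client_config` and `load_client_config` are hypothetical helper names.

```python
import json
from pathlib import Path


def check_client_config(data):
    """Return (client_type, client_id) from parsed client-secrets JSON.

    Assumes the usual layout with an 'installed' or 'web' section and raises
    ValueError if neither section (or its id/secret) is present.
    """
    for client_type in ("installed", "web"):
        section = data.get(client_type)
        if isinstance(section, dict):
            if "client_id" not in section or "client_secret" not in section:
                raise ValueError(f"'{client_type}' section is missing client_id or client_secret")
            return client_type, section["client_id"]
    raise ValueError("no 'installed' or 'web' section found; is this the client secrets file?")


def load_client_config(path="client_secrets.json"):
    """Read the downloaded file from disk and run the check above."""
    return check_client_config(json.loads(Path(path).read_text(encoding="utf-8")))
```

For example, `print(load_client_config("client_secrets.json"))` in the project folder should show the client type and your client ID.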

Let’s get coding!

1. Open a new notebook

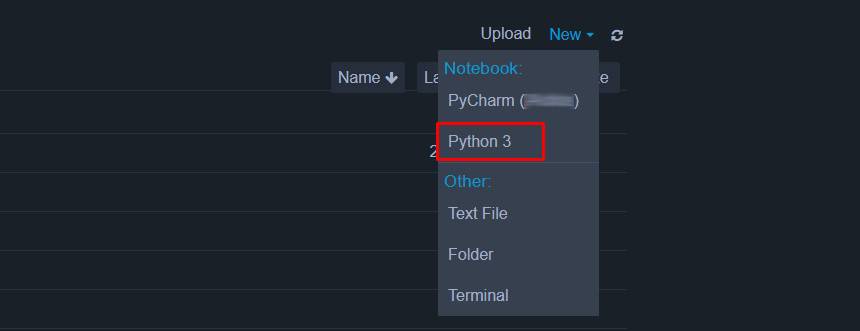

First, create the folder for your project somewhere on your hard drive. In your Jupyter Notebook interface, navigate to the folder you want to start working in (important!) and create a new Python 3 notebook.

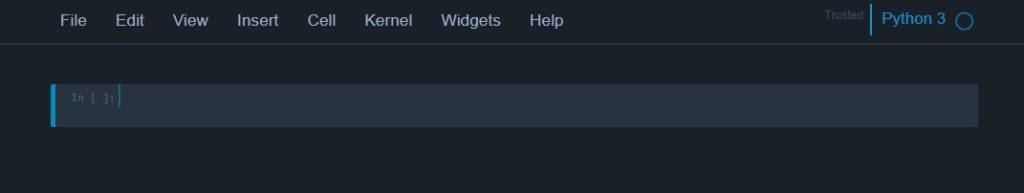

You'll be greeted by a simple interface with a single empty cell.

We’ll be using this interface to create the code for extracting GSC data.

While we’re at it, drop a copy of your “client secrets” file into the same folder and rename it “client_secrets.json”. We’ll need this later.

2. Import the needed modules

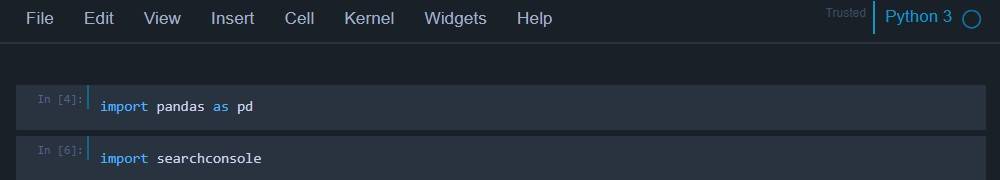

At this stage, we need to import the modules we installed earlier. To do this, type the following and press shift+enter to execute:

```python
import pandas as pd
import searchconsole
```

If both imports succeed, the cell runs without printing an error.

Tip:

Press “shift+enter” to run the current cell and move to the next one (Jupyter creates a new cell if you're at the end of the notebook).

Press “ctrl+enter” to run the current cell without moving on or creating a new cell.

3. Authenticate the API access

You could authenticate every single time you access the GSC API, but in this guide I'll show you how to simplify the process so that you don't get a login prompt every time you run the script. I strongly recommend following the steps below, which create a reusable credentials.json file:

3.1 Drop your renamed client_secrets.json file into the same folder you're running the notebook from (if you haven't done so already) and type (or copy and paste) the following:

```python
account = searchconsole.authenticate(client_config='client_secrets.json', serialize='credentials.json')
```

3.2 You will be prompted to log in. Use the same account you created client_secrets.json with, and authorise the access. This command creates a credentials.json file, which you'll use from now on together with client_secrets.json to avoid a login prompt every time you need to access the API.

This step will make your life easier: every single time after this, when you create a new GSC API export, use this line instead:

```python
account = searchconsole.authenticate(client_config='client_secrets.json', credentials='credentials.json')
```

Note: you'll need to keep both client_secrets.json and credentials.json in the project folder, i.e., the folder your Python script runs from.
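If you'd rather not switch between the two authentication lines by hand, a small helper can pick the right arguments based on whether credentials.json already exists. This is a sketch: `auth_kwargs` is a hypothetical name, and it only uses the `client_config`, `credentials` and `serialize` arguments shown in the steps above.

```python
def auth_kwargs(credentials_saved, client_config="client_secrets.json",
                credentials="credentials.json"):
    """Pick keyword arguments for searchconsole.authenticate().

    First run (no saved credentials): serialize the new login to `credentials`.
    Later runs: load the saved `credentials` so no login prompt appears.
    """
    if credentials_saved:
        return {"client_config": client_config, "credentials": credentials}
    return {"client_config": client_config, "serialize": credentials}


# Usage in the notebook (requires the wrapper installed earlier):
# from pathlib import Path
# import searchconsole
# account = searchconsole.authenticate(**auth_kwargs(Path("credentials.json").exists()))
```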

4. Get the data

Finally, we’re on the last leg!

4.1 Go ahead and type the following in, followed by “shift+enter” to execute:

```python
webproperty = account['https://www.example.com/']
```

Important: make sure the URL matches exactly how the property is entered in GSC, including www (or its absence) and the trailing slash, with no spaces inside the quotes.

Then type the following and press “shift+enter” to execute:

```python
exampleGSC = webproperty.query.range('2020-09-01', '2020-09-02').dimension('query').get()
```
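Because a mismatched property URL is an easy mistake to make, here is a hypothetical checker (`check_property_url` is my own name) that flags the most common slips: stray spaces, a missing scheme, or a missing trailing slash. It assumes URL-prefix properties are written like `https://www.example.com/` and that domain properties use the `sc-domain:` prefix the Search Console API expects. An empty result doesn't prove the property is in your account; it only rules out these typos.

```python
def check_property_url(url):
    """Return a list of likely problems with a GSC property identifier."""
    problems = []
    if url != url.strip():
        problems.append("leading or trailing whitespace")
    cleaned = url.strip()
    if cleaned.startswith("sc-domain:"):
        # Domain property, e.g. 'sc-domain:example.com': no scheme or slash expected.
        return problems
    if not cleaned.startswith(("http://", "https://")):
        problems.append("missing http:// or https://")
    if not cleaned.endswith("/"):
        problems.append("URL-prefix properties normally end with '/'")
    return problems


print(check_property_url(" https://www.example.com/"))
```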

4.2 Choose your date range and dimension. The example above requests two days of data with ‘query’ as the dimension. If you want to access more dimensions, I cover that later in this guide, so keep reading.
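If you don't want to type dates by hand, Python's standard `datetime` module can build them. `date_range` below is a hypothetical helper (not part of the wrapper) that returns `YYYY-MM-DD` strings, counting both ends inclusively, consistent with the example above covering two days. The three-day offset in the usage line is an assumption based on GSC data usually lagging a couple of days behind.

```python
from datetime import date, timedelta


def date_range(n_days, end):
    """Return (start, end) 'YYYY-MM-DD' strings for n_days ending on `end`, both inclusive."""
    if n_days < 1:
        raise ValueError("n_days must be at least 1")
    start = end - timedelta(days=n_days - 1)
    return start.isoformat(), end.isoformat()


# Usage: the last 7 days of data, ending 3 days ago.
start, end = date_range(7, date.today() - timedelta(days=3))
print(start, end)
# exampleGSC = webproperty.query.range(start, end).dimension('query').get()
```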

4.3 Convert the results into a DataFrame (don't worry about what this is; you just need it to export to a CSV file). Type the following and press “shift+enter” to execute:

```python
exampleBVreport = pd.DataFrame(data=exampleGSC)
```

4.4 And, finally, let's export it (press “ctrl+enter” to execute):

```python
exampleBVreport.to_csv('exampleCSV.csv', index=False)
```

4.5 Check your project folder. You’ll find the full data export in exampleCSV.csv there.
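To see what steps 4.3 and 4.4 do without touching the API, the sketch below builds a DataFrame from two made-up rows and writes it to an in-memory buffer instead of a file. The row layout (a `query` column plus `clicks`, `impressions`, `ctr` and `position`) is an assumption about the report's shape, and the numbers are invented.

```python
import io
from collections import namedtuple

import pandas as pd

# Invented example rows; the column layout is an assumption about the report's shape.
Row = namedtuple("Row", ["query", "clicks", "impressions", "ctr", "position"])
rows = [
    Row("example query", 12, 340, 12 / 340, 4.2),
    Row("another query", 3, 95, 3 / 95, 8.7),
]

# Step 4.3: namedtuple field names become the DataFrame's column names.
df = pd.DataFrame(data=rows)

# Step 4.4: an in-memory buffer stands in for 'exampleCSV.csv'.
buffer = io.StringIO()
df.to_csv(buffer, index=False)  # index=False leaves out pandas' 0, 1, 2... row labels
csv_text = buffer.getvalue()
print(csv_text)
```

Without `index=False`, the CSV would gain an extra unnamed first column holding those row labels.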

That’s it. You’ve done it!

Note: This will help you to differentiate between the projects you’re working on.

Bonus: making it easily reusable

Now that you have everything set up and know your code works, you can save it as a *.py (Python) file in your project folder, which makes it very easy to reuse.

Note: text following “#” is a comment explaining what the next step in the code is doing.

```python
# Import Pandas
import pandas as pd

# Import Search Console wrapper
import searchconsole

# Authenticate with GSC (don't forget to drop both JSON files into the same folder)
account = searchconsole.authenticate(client_config='client_secrets.json', credentials='credentials.json')

# Connect to the GSC property
webproperty = account['https://www.example.com/']

# Set your dates and dimensions
exampleGSC = webproperty.query.range('2020-09-01', '2020-09-02').dimension('query').get()

# Make it a Data Frame
exampleBVreport = pd.DataFrame(data=exampleGSC)

# Export to *.csv
exampleBVreport.to_csv('exampleCSV.csv', index=False)
```

Don’t forget to:

- Place your client_secrets.json and credentials.json files into your project folder.

- Replace the URL in the webproperty line with your property's exact URL as it appears in GSC.

- Set your dates.

- You can change the exported CSV file name if you want (this is optional).

Then, in your Terminal, navigate to the folder where the script is saved. Here are a couple of quick tricks for moving around your file system in the Terminal:

- If you type `cd ..` (that's two periods), you'll go to the folder above the one you're currently in.

- If you type `ls` (that's a lowercase L, not an I), you'll list all files in the current folder (useful when you forget which folder you want to go into next).

- If you type `cd folder/deeperfolder`, you'll navigate to the specified place in the path (in this case, ‘deeperfolder’ inside ‘folder’). If you prefer, you can move one folder at a time.

Note: if you want to learn more about how to navigate in the Terminal, this article can be a good starting point.

Once you're in the right folder, run:

```
python yourfilename.py
```

The script will automatically go through all its steps and export the file to your folder. See how fast that is?
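To reuse one script across sites and date ranges without editing it each time, you could read those values from the command line with Python's standard `argparse` module. This is a sketch: the argument names (`property`, `start`, `end`, `--dimensions`, `--output`) are my own choice, and the commented lines show how they would replace the hard-coded values in the script above.

```python
import argparse


def parse_args(argv=None):
    """Read the export settings from the command line (argument names are this sketch's own)."""
    parser = argparse.ArgumentParser(description="Export Google Search Console data to CSV.")
    parser.add_argument("property", help="exact property URL, e.g. https://www.example.com/")
    parser.add_argument("start", help="start date as YYYY-MM-DD")
    parser.add_argument("end", help="end date as YYYY-MM-DD")
    parser.add_argument("--dimensions", nargs="+", default=["query"],
                        help="any of: query page device country")
    parser.add_argument("--output", default="exampleCSV.csv", help="CSV file to write")
    return parser.parse_args(argv)


# In your .py script, after authenticating, replace the hard-coded values like this:
# args = parse_args()
# webproperty = account[args.property]
# exampleGSC = webproperty.query.range(args.start, args.end).dimension(*args.dimensions).get()
# exampleBVreport = pd.DataFrame(data=exampleGSC)
# exampleBVreport.to_csv(args.output, index=False)
```

You would then run, for example, `python yourfilename.py https://www.example.com/ 2020-09-01 2020-09-02 --dimensions query page --output report.csv`.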

What about other GSC information?

This guide works with four dimensions you can export through this API access:

- ‘query’

- ‘page’

- ‘device’

- ‘country’ (will export country code)
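A typo in a dimension name only shows up once the request reaches the API, so you may want to check the names first. `check_dimensions` is a hypothetical helper limited to the four dimensions this guide covers; the Search Console API itself accepts a few more (for example `date`), so extend the tuple if you need them.

```python
# The four dimensions covered in this guide.
KNOWN_DIMENSIONS = ("query", "page", "device", "country")


def check_dimensions(*dimensions):
    """Return the dimensions unchanged, or raise ValueError for unknown or repeated names."""
    unknown = [d for d in dimensions if d not in KNOWN_DIMENSIONS]
    if unknown:
        raise ValueError(f"unknown dimension(s): {unknown}; expected some of {KNOWN_DIMENSIONS}")
    if len(set(dimensions)) != len(dimensions):
        raise ValueError("each dimension should appear only once")
    return dimensions


# Usage: .dimension(*check_dimensions('query', 'page'))
print(check_dimensions("query", "page"))
```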

If you want to export more of them, update the dimension(...) call on the line under “# Set your dates and dimensions”, as shown below:

```python
# Import Pandas
import pandas as pd

# Import Search Console wrapper
import searchconsole

# Authenticate with GSC (don't forget to drop both JSON files into the same folder)
account = searchconsole.authenticate(client_config='client_secrets.json', credentials='credentials.json')

# Connect to the GSC property
webproperty = account['https://www.example.com/']

# Set your dates and dimensions
exampleGSC = webproperty.query.range('2020-09-01', '2020-09-02').dimension('query', 'page', 'device', 'country').get()

# Make it a Data Frame
exampleBVreport = pd.DataFrame(data=exampleGSC)

# Export to *.csv
exampleBVreport.to_csv('exampleCSV.csv', index=False)
```

Note: you can add or remove dimensions to make different combinations depending on your needs, but remember that the more dimensions you include, the bigger the export files become.
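If you want several exports with different dimension mixes, the standard library's `itertools.combinations` can list them for you; with the four dimensions above there are 15 non-empty combinations. `dimension_combinations` and `export_filename` are hypothetical helpers, and the file-naming scheme is only a suggestion that keeps the exports easy to tell apart.

```python
from itertools import combinations

DIMENSIONS = ["query", "page", "device", "country"]


def dimension_combinations(dimensions=DIMENSIONS):
    """Yield every non-empty combination of `dimensions`, smallest first."""
    for size in range(1, len(dimensions) + 1):
        yield from combinations(dimensions, size)


def export_filename(dims, prefix="gsc"):
    """Build a file name such as 'gsc_query_page.csv'."""
    return f"{prefix}_{'_'.join(dims)}.csv"


combos = list(dimension_combinations())
for dims in combos:
    print(export_filename(dims))

# To export each combination with the objects from the script above:
# for dims in combos:
#     report = webproperty.query.range('2020-09-01', '2020-09-02').dimension(*dims).get()
#     pd.DataFrame(data=report).to_csv(export_filename(dims), index=False)
```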

Here are the files for you to download and reuse

Download the Python files for the query export and for all available dimensions.

With a little prep to set up the environment, you can not only speed up your Google Search Console data exports but also get around the 1,000-row export limit of the GSC interface. You can reuse the same code every time by just changing the website you're working on and the export parameters.
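Why isn't the API held to the interface's 1,000-row limit? The Search Analytics API returns results in pages: each request takes a `rowLimit` (up to 25,000) and a `startRow` offset, and a client keeps requesting the next page until a short one comes back. The wrapper is expected to handle this for you; the sketch below only illustrates the general paging pattern, with `fetch_page` as a hypothetical stand-in for a real API call.

```python
def fetch_all(fetch_page, page_size=25000):
    """Collect every row from a paged API.

    `fetch_page(start_row, row_limit)` is a hypothetical callable returning a
    list of at most `row_limit` rows that begins at offset `start_row`.
    """
    rows = []
    start_row = 0
    while True:
        page = fetch_page(start_row, page_size)
        rows.extend(page)
        if len(page) < page_size:  # a short (or empty) page means there is nothing left
            return rows
        start_row += page_size


# Demo with an in-memory list standing in for the API.
fake_results = [f"row {i}" for i in range(7)]
all_rows = fetch_all(lambda start, limit: fake_results[start:start + limit], page_size=3)
print(len(all_rows))
```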

Please drop a comment below if you have any questions; I'll be more than happy to help you out.