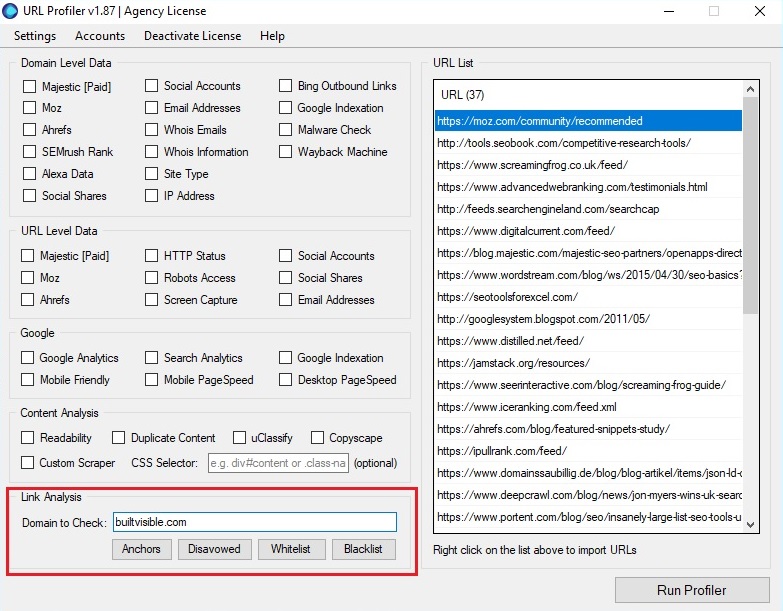

URL Profiler:

One of the easiest and most accessible tools to filter out links is URL Profiler. The cost of a license is relatively low and it is capable of handling large amounts of data.

To identify dead links, load the URL list, enter the domain you want to check against in the ‘Link Analysis’ section and click “Run Profiler”.

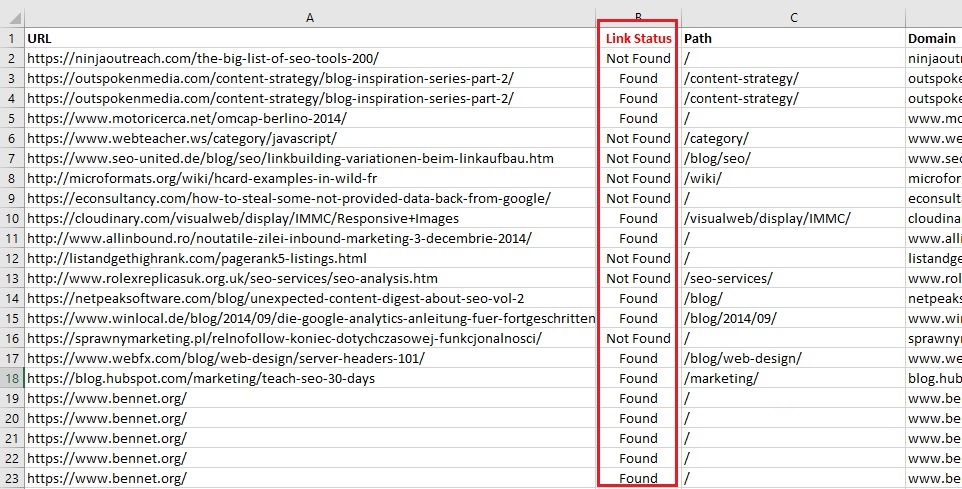

Once the crawl is complete, the results can be viewed in the standard export, specifically in the Link Status column, highlighted below.

If you encounter a large number of server errors, consider slowing down your crawl or changing the user-agent to Googlebot.

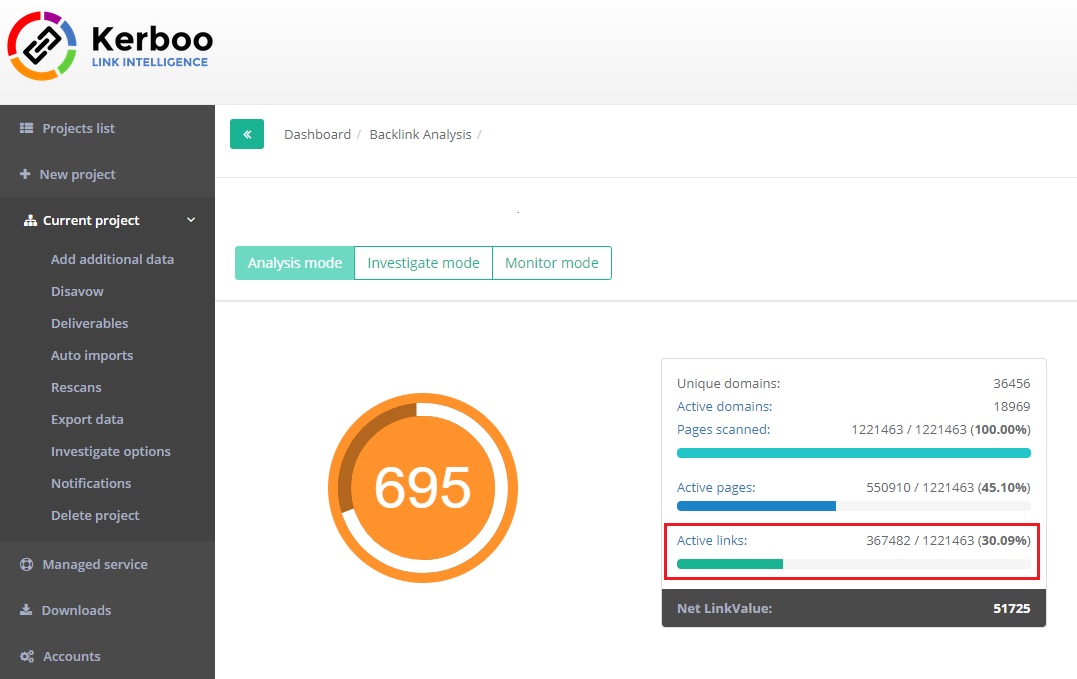

Kerboo:

Another excellent option, and the tool we typically use internally for link auditing, is Kerboo. Kerboo is a specialist tool for backlink analysis and has real utility whether you are auditing existing links or prospecting for new opportunities.

Kerboo automatically merges link profile data, which can be imported via the APIs of Majestic, Ahrefs and Search Console on an ongoing basis, or manually uploaded as a list of URLs. As it is cloud based, Kerboo won’t hog your PC’s processing power during a crawl, which on large profiles can take days to complete.

In the above example, 70% of the complete link profile (gathered from Majestic, Ahrefs, Search Console and Moz) was no longer live.

One of the advantages of Kerboo over other tools is that it allows scanning for multiple domains. This can come in handy if your website went through a domain migration in the past and you would like to scan for links pointing to the legacy version as well.

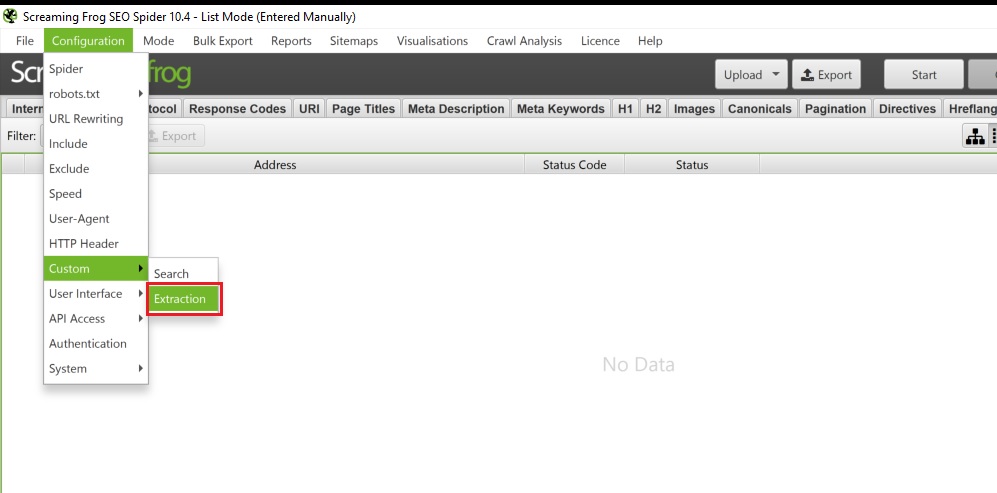

Screaming Frog:

Identifying pages whose links are no longer live is also possible with the Swiss army knife of SEO, Screaming Frog. Screaming Frog’s custom extraction function, combined with a relatively simple RegEx rule, can establish whether a crawled page contains a link to the site under review.

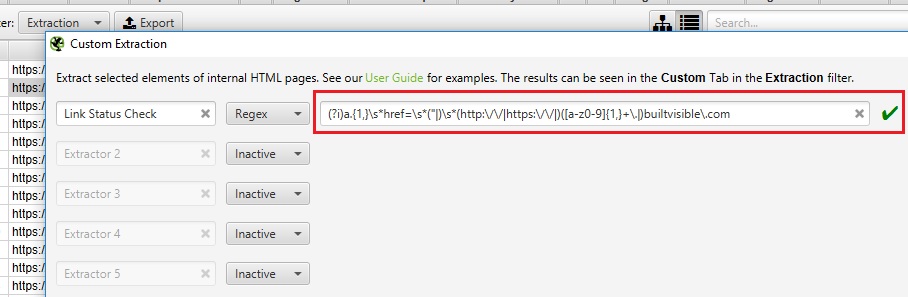

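The RegEx rule below, once inserted into the extraction field within Screaming Frog, will list any page that features a valid link pointing to builtvisible.com. It will filter out links with incorrect HTML syntax and look for links pointing to subdomains as well (“www.”, “testing.”, etc.). Note that the subdomain group only matches letters and digits, so hyphenated or multi-level subdomains would need the character class extended.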

Our example:

(?i)a.{1,}\s*href=\s*("|)\s*(http:\/\/|https:\/\/|)([a-z0-9]{1,}+\.|)builtvisible\.com

Regex template:

(?i)a.{1,}\s*href=\s*("|)\s*(http:\/\/|https:\/\/|)([a-z0-9]{1,}+\.|)[Example Domain]\.[Domain TLD]

Please note that, to keep the regex syntax valid, any “.” characters in the domain and its TLD must be escaped by inserting a “\” in front of them (for example, “co\.uk”).
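As a rough illustration of how the template could be filled in outside of Screaming Frog, here is a minimal Python sketch. The helper name `build_link_pattern` and the sample HTML snippets are hypothetical and not part of the original workflow. `re.escape` takes care of escaping the “.” characters, and the possessive quantifier `{1,}+` is swapped for a plain `+`, because Python's `re` module only supports possessive quantifiers from version 3.11 onwards.

```python
import re


def build_link_pattern(domain: str) -> re.Pattern:
    """Fill the regex template with a domain such as 'builtvisible.com'.

    Hypothetical helper: re.escape() escapes the dots in the domain,
    and re.IGNORECASE stands in for the (?i) prefix of the original rule.
    """
    template = r'a.{1,}\s*href=\s*("|)\s*(http://|https://|)([a-z0-9]+\.|)'
    return re.compile(template + re.escape(domain), re.IGNORECASE)


pattern = build_link_pattern("builtvisible.com")

# Made-up snippets of crawled HTML, purely for demonstration.
samples = [
    '<a class="x" href="https://www.builtvisible.com/blog">post</a>',
    '<a href="https://example.com/">elsewhere</a>',
]

for html in samples:
    # search() returns a match object when a link to the domain is present.
    print(bool(pattern.search(html)), html)
```

Testing the rule against a handful of known pages like this before a large crawl can catch a mistyped or unescaped domain early.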

To make sure you get the most accurate results possible, it’s also recommended that the crawls run with the following settings:

- Always Follow Redirects

- 5xx Response Retries (changed from 0 to 5)

- Crawl Speed (changed to 5 URLs/s from unlimited)

- User Agent (changed to Googlebot from Screaming Frog Bot)

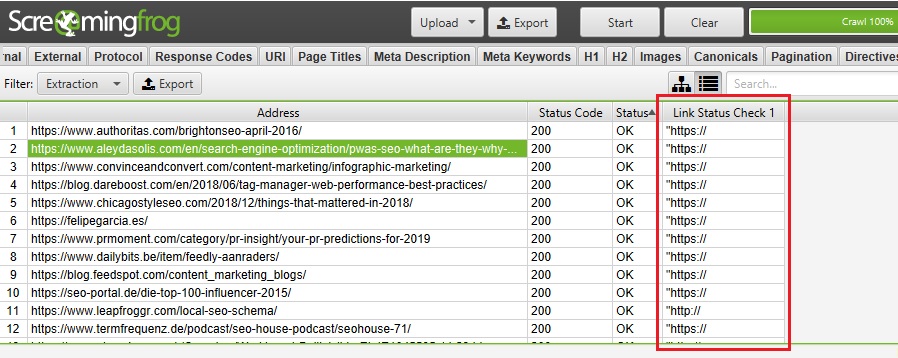
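For readers who want to see what these settings amount to in practice, the sketch below mimics them in plain Python. It is not how Screaming Frog works internally. The function names `http_fetch` and `fetch_with_retries` are hypothetical, the 0.2 second pause is simply an approximation of 5 URLs/s, and connection-level failures (`URLError`) are deliberately left unhandled to keep the example short.

```python
import time
import urllib.error
import urllib.request

# Googlebot's desktop user-agent string.
USER_AGENT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def http_fetch(url, timeout=10):
    """Return (status_code, body). urllib follows redirects by default."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
            return response.status, body
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses arrive as exceptions; report the status code.
        return error.code, ""


def fetch_with_retries(url, fetch=http_fetch, retries=5, delay=0.2):
    """Retry on 5xx responses, pausing `delay` seconds before each request."""
    status, body = 0, ""
    for _attempt in range(retries + 1):
        time.sleep(delay)  # 0.2s between requests is roughly 5 URLs/s
        status, body = fetch(url)
        if status < 500:
            break  # anything other than a server error is treated as final
    return status, body
```

Calling `fetch_with_retries("https://example.com/")` would return the final status code and page body. The `fetch` parameter exists only so the retry logic can be exercised without touching the network.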

After Screaming Frog has completed its crawl the results should look like this within the extraction tab:

If the “Link Status Check 1” field is populated, it means that a link pointing to the defined domain has been spotted.
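Once the extraction results are exported, the populated/empty check can be automated. The following is a minimal sketch that assumes a CSV export with an “Address” column for the crawled URL and a “Link Status Check 1” column for the extraction. Both column names, the function `split_by_link_status` and the sample rows are assumptions for illustration, so adjust them to match your own export.

```python
import csv
import io


def split_by_link_status(csv_text, column="Link Status Check 1", url_column="Address"):
    """Split crawled URLs into (live, dead) based on whether `column` is populated."""
    live, dead = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # A populated extraction field means a link to the domain was found.
        bucket = live if (row.get(column) or "").strip() else dead
        bucket.append(row[url_column])
    return live, dead


# Made-up export rows, purely for demonstration.
sample_export = (
    "Address,Link Status Check 1\n"
    "https://example.com/page-a,a href=https://builtvisible.com\n"
    "https://example.com/page-b,\n"
)

live_pages, dead_pages = split_by_link_status(sample_export)
print("Live:", live_pages)
print("Dead:", dead_pages)
```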

It’s important to keep in mind that, unlike the previous tools, Screaming Frog is quite memory intensive when using the default storage mode. If you are scanning several thousand links, it’s worth switching the storage mode so that the crawl is written to disk (HDD/SSD) instead. This reduces the strain on system memory at the cost of some crawl speed.

Taking things further

Although this post primarily focused on reviewing the status of a link for auditing purposes, there are many other uses for this approach. As an example, when trying to recover lost link equity, knowing which links are live can drastically reduce your list of recommended redirects.
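To make the redirect example concrete, here is a small sketch of how a list of live linking pages might be used to trim a redirect list. The function name `redirects_worth_keeping`, the data layout and the sample URLs are all hypothetical. The idea is simply to keep a legacy URL only if at least one live page still links to it.

```python
def redirects_worth_keeping(backlinks, live_sources):
    """Return legacy target URLs that still have at least one live link.

    backlinks: iterable of (source_page, legacy_target_url) pairs.
    live_sources: pages confirmed by the crawl to still contain the link.
    """
    live = set(live_sources)
    targets = {target for source, target in backlinks if source in live}
    return sorted(targets)


# Made-up data, purely for demonstration.
backlinks = [
    ("https://blog.example.org/review", "https://old-site.example/product"),
    ("https://news.example.net/story", "https://old-site.example/about"),
    ("https://forum.example.io/thread", "https://old-site.example/product"),
]
live_sources = ["https://blog.example.org/review"]

print(redirects_worth_keeping(backlinks, live_sources))
```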

Regardless of what you use this for, I hope the techniques discussed in this post save you some time – so you can concentrate on analysing the links that matter.

Zgred

Take a look on Clusteric.com :) Nice software from Poland, also available in English version. Very useful to prepare Onsite and Offsite analysis.

Dan Smullen

Screaming frog and that custom regex extraction to do this is the business. Thanks for sharing. Another cool way of using SF and this technique is to grab all brand mentions from google news to check for unlinked brand mentions at scale! A little off topic, but popped to mind when reading this.

Consulenza

Another pro for Screaming Frog. I just love this software more and more!

Lisa George

Very interesting post on Link Analysis.

Awesome tips and step by step explanation. Really appreciate the way you have written and explained.

I am really gonna apply this for the future. Worth reading it.

Thanks for sharing it with us.

Good work..!!