What does WGET do?

Once installed, the WGET command allows you to download files over the TCP/IP protocols: FTP, HTTP and HTTPS.

If you’re a Linux or Mac user, WGET is either already included in the distribution you’re running or it’s a trivial case of installing it from whatever repository you prefer with a single command.

Unfortunately, it’s not quite that simple in Windows (although it’s still very easy!).

To run WGET, you need to download, unzip and install it manually.

Install WGET in Windows 10

Download the classic 32 bit version 1.14 here, or go to this Windows binaries collection at Eternally Bored here for the later versions and the faster 64 bit builds.

Here is the downloadable zip file for version 1.2 64 bit.

If you want to be able to run WGET from any directory inside the command terminal, you’ll need to learn about path variables in Windows to work out where to copy your new executable. If you follow these steps, you’ll be able to make WGET a command you can run from any directory in Command Prompt.

Run WGET from anywhere

Firstly, we need to determine where to copy WGET.exe.

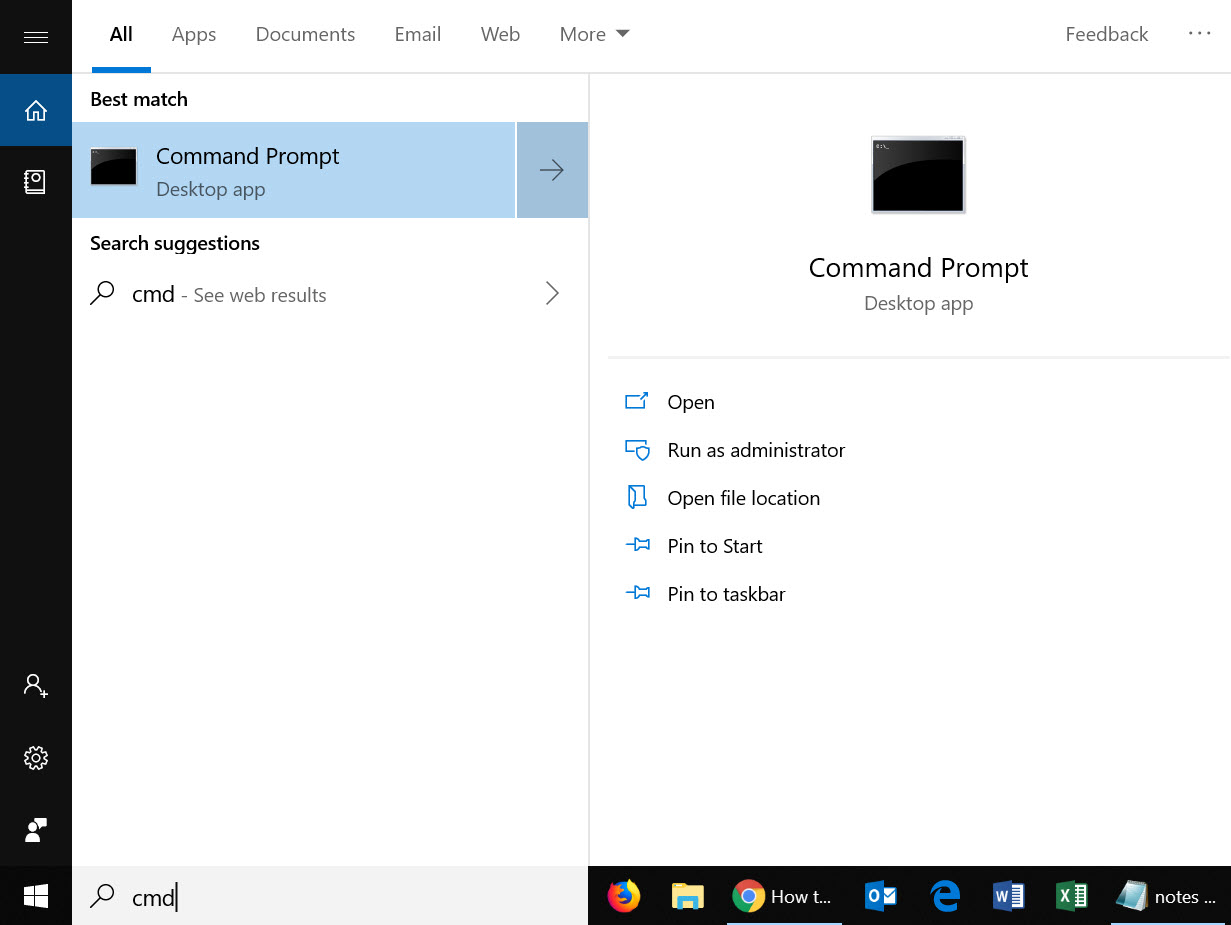

After you’ve downloaded wget.exe (or unpacked the associated distribution zip files), open a command terminal by typing “cmd” in the search menu:

We’re going to move wget.exe into a Windows directory that will allow WGET to be run from anywhere.

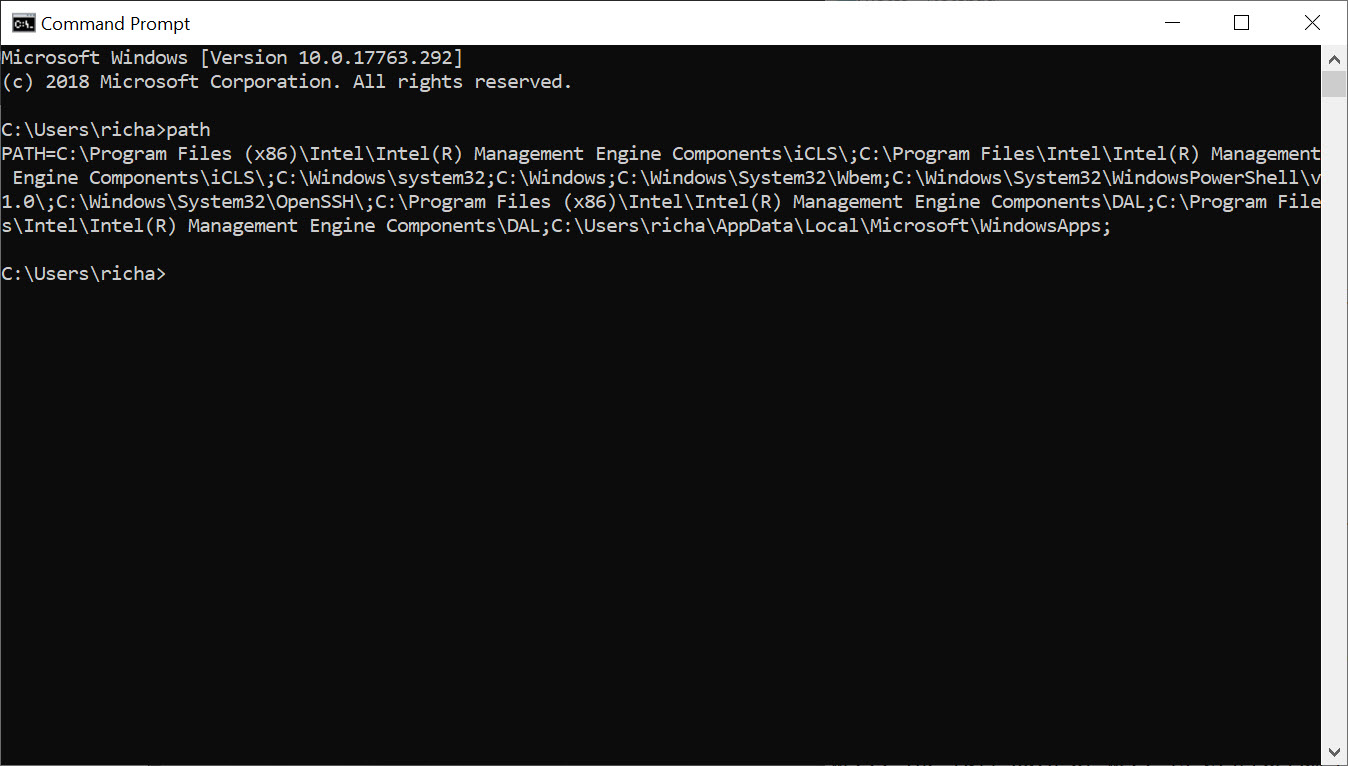

First, we need to find out which directory that should be. Type:

path

You should see something like this:

The “Path” environment variable lists the directories Windows searches for executables. C:\Windows\System32 is one of them, so that’s the folder we need to copy wget.exe to.

Go ahead and copy wget.exe to the System32 directory (you’ll need administrator rights to write there) and restart your Command Prompt.
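If you’d rather inspect the search path from a script than read the output of the path command by eye, here is a minimal sketch in Python. It isn’t part of the original steps; `split_path` is a hypothetical helper name chosen for illustration, and it only splits the Path value into its individual directories.

```python
import os


def split_path(path_value, separator=os.pathsep):
    """Split a PATH-style string into its directories, skipping empty entries."""
    return [entry.strip() for entry in path_value.split(separator) if entry.strip()]


if __name__ == "__main__":
    # Print every directory on the current search path, one per line.
    for directory in split_path(os.environ.get("PATH", "")):
        print(directory)
```

Any directory printed here is a place where wget.exe would be found from any prompt; System32 is simply one that exists on every Windows machine.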

Restart command terminal and test WGET

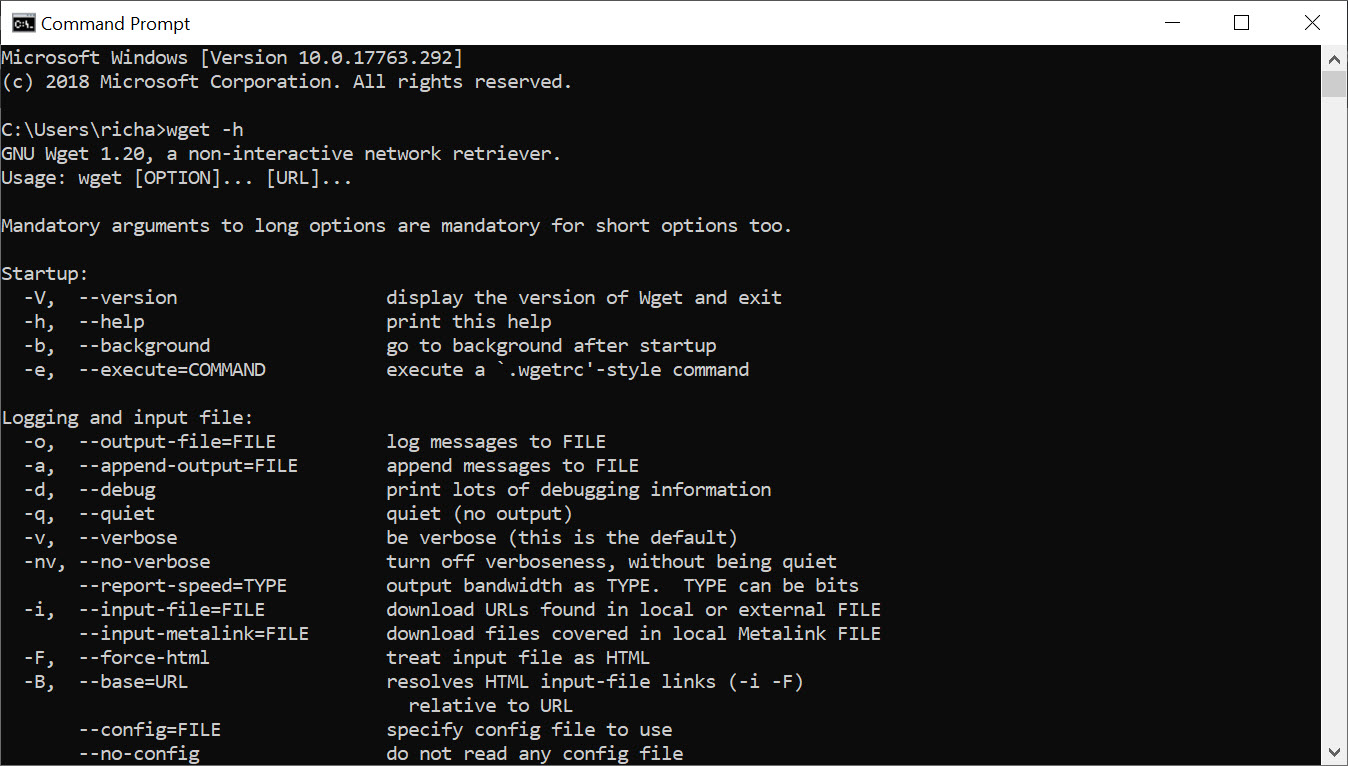

To test that WGET is working properly, restart your terminal and type:

wget -h

If you’ve copied the file to the right place, you’ll see the help text appear, listing all of the available options.

So, you should see something like this:

Now it’s time to get started.
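The same check can be scripted. The sketch below, in Python, looks up wget on the search path and asks it for its version. The function names are hypothetical; it assumes Python 3.7 or later and relies only on wget’s `--version` option.

```python
import shutil
import subprocess


def find_executable(name="wget"):
    """Return the full path of `name` if it is on the search path, else None."""
    return shutil.which(name)


def first_version_line(executable):
    """Run `<executable> --version` and return the first line it prints."""
    result = subprocess.run(
        [executable, "--version"], capture_output=True, text=True, check=False
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""


if __name__ == "__main__":
    location = find_executable("wget")
    if location is None:
        print("wget was not found on the search path")
    else:
        print(location)
        print(first_version_line(location))
```

If the script reports that wget was not found, the executable is probably sitting in a folder that isn’t listed in Path.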

Get started with WGET

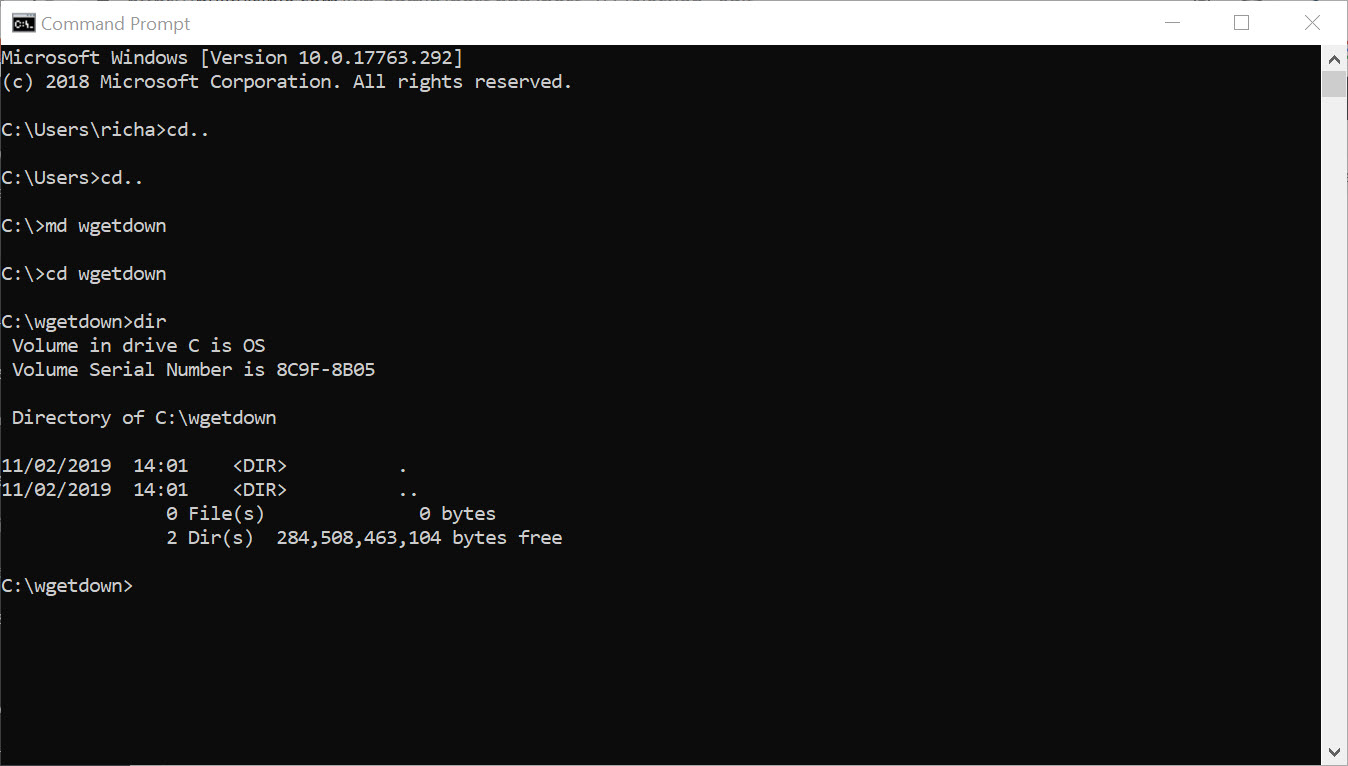

Seeing that we’ll be working in Command Prompt, let’s create a download directory just for WGET downloads.

To create a directory, we’ll use the command md (“make directory”).

Change to the C:\ prompt and type:

md wgetdown

Then, change to your new directory (cd wgetdown) and type “dir” to see the (empty) contents.
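For anyone scripting their setup, the md and dir steps can be approximated in Python as below. This is only a sketch: `make_download_dir` and `list_contents` are hypothetical names, and the parent folder is whatever you pass in (for example `"C:/"`).

```python
from pathlib import Path


def make_download_dir(parent, name="wgetdown"):
    """Create `name` inside `parent` (no error if it already exists) and return its path."""
    target = Path(parent) / name
    target.mkdir(parents=True, exist_ok=True)
    return target


def list_contents(directory):
    """Return the sorted names of the entries in `directory`, like a bare `dir` listing."""
    return sorted(entry.name for entry in Path(directory).iterdir())
```

Calling `list_contents(make_download_dir("C:/"))` on a fresh folder returns an empty list, which matches the empty directory listing you get in Command Prompt.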

Now, you’re ready to do some downloading.

Example commands

Once you’ve got WGET installed and you’ve created a new directory, all you have to do is learn some of the finer points of WGET arguments to make sure you get what you need.

The Gnu.org WGET manual is a particularly useful resource for those inclined to really learn the details.

If you want some quick commands though, read on. I’ve listed a set of WGET commands to recursively mirror your site, download all the images, CSS and JavaScript, localise all of the URLs (so the site works on your local machine), and save all the pages as .html files.

To download your site recursively (by default, WGET follows links up to five levels deep), execute this command:

wget -r https://www.yoursite.com

To mirror the site and localise all of the URLs:

wget --convert-links -r https://www.yoursite.com

To make a full offline mirror of a site:

wget --mirror --convert-links --adjust-extension --page-requisites --no-parent https://www.yoursite.com
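If you run this mirror regularly, it can help to keep the flags in one place with a note on what each does. Below is a minimal Python sketch that builds the same command as an argument list and runs it inside a chosen folder. `build_mirror_command` and `run_mirror` are hypothetical names, and the script assumes wget is already on your search path.

```python
import subprocess

# The same options as the command above, one per line with a short reminder.
MIRROR_FLAGS = [
    "--mirror",            # recursive download with timestamping and unlimited depth
    "--convert-links",     # rewrite links so the pages work locally
    "--adjust-extension",  # save HTML and CSS with matching file extensions
    "--page-requisites",   # also fetch the images, CSS and scripts each page needs
    "--no-parent",         # never climb above the starting directory
]


def build_mirror_command(url, executable="wget"):
    """Return the full-mirror command as a list of arguments."""
    return [executable, *MIRROR_FLAGS, url]


def run_mirror(url, download_dir):
    """Run the mirror with `download_dir` as the working directory; return wget's exit code."""
    completed = subprocess.run(build_mirror_command(url), cwd=download_dir, check=False)
    return completed.returncode
```

Passing the arguments as a list rather than one long string avoids quoting problems when a URL contains characters such as `&`.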

To mirror the site and save the files as .html (--html-extension is the older name for --adjust-extension, which newer WGET versions prefer):

wget --html-extension -r https://www.yoursite.com

To download all jpg images from a site:

wget -A "*.jpg" -r https://www.yoursite.com
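To get a feel for what a pattern like "*.jpg" will and won’t keep, here is a small Python sketch using shell-style wildcard matching. It only approximates WGET’s own matching, and the file names are made up for illustration. Like WGET without its --ignore-case option, the match is case-sensitive.

```python
from fnmatch import fnmatchcase


def is_accepted(filename, patterns):
    """Return True if `filename` matches at least one wildcard pattern (case-sensitive)."""
    return any(fnmatchcase(filename, pattern) for pattern in patterns)


# Hypothetical file names, purely for illustration.
files = ["logo.jpg", "photo.JPG", "style.css", "index.html"]
accepted = [name for name in files if is_accepted(name, ["*.jpg"])]
print(accepted)  # ['logo.jpg']
```

Note that photo.JPG is skipped: if a site mixes upper and lower case extensions, list both patterns separated by a comma, or add --ignore-case to the WGET command.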

For more filetype-specific operations, check out this useful thread on Stack.

Set a different user agent:

Some web servers are set up to deny WGET’s default user agent, for obvious bandwidth-saving reasons. You could try changing your user agent to get round this. For example, by pretending to be Googlebot:

wget --user-agent="Googlebot/2.1 (+http://www.google.com/bot.html)" -r https://www.yoursite.com

Wget “spider” mode:

Wget can fetch pages without saving them, which is useful if you’re looking for broken links on a website. Remember to enable recursive mode, which allows wget to scan through each document and look for links to traverse.

wget --spider -r https://www.yoursite.com

You can also save the output to a log file by adding the -o option:

wget --spider -r https://www.yoursite.com -o wget.log
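Once you have a log file, a short script can pull out the broken links for you. The Python sketch below rests on an assumption about the log layout: that each request starts with a timestamped line such as `--2024-01-01 12:00:00--  https://www.yoursite.com/page` and that a missing page is reported with `awaiting response... 404`. The exact wording can differ between WGET versions and languages, so check it against your own wget.log before relying on it. `broken_links` is a hypothetical helper name.

```python
import re

# Assumed layout of a request line: "--YYYY-MM-DD HH:MM:SS--  <url>"
URL_LINE = re.compile(r"^--\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}--\s+(\S+)")


def broken_links(log_text):
    """Return the URLs whose request was followed by a 404 response line."""
    broken = []
    current_url = None
    for line in log_text.splitlines():
        match = URL_LINE.match(line)
        if match:
            # Remember which URL the following response lines belong to.
            current_url = match.group(1)
        elif current_url and "awaiting response... 404" in line:
            if current_url not in broken:
                broken.append(current_url)
    return broken


if __name__ == "__main__":
    try:
        with open("wget.log", encoding="utf-8", errors="replace") as log_file:
            for url in broken_links(log_file.read()):
                print(url)
    except FileNotFoundError:
        print("wget.log not found in the current directory")
```

Run it from the same directory as wget.log and it prints one broken URL per line.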

Enjoy using this powerful tool, and I hope you’ve enjoyed my tutorial. Comments welcome!